All You Need To Know For Moving To HTTPS

Last updated on November 28th, 2019. First published on October 11th, 2016.

Driven by Google's initiative to get more websites to use HTTPS and by Snowden's revelations, the number of websites moving to HTTPS has been growing steadily. The switch to HTTPS is a major technical challenge that should not be underestimated. From an SEO point of view, it requires resource allocation, long-term planning and preparation, and watertight execution, and it is never free of risk.

This guide shows beginner and advanced website owners how to move from HTTP to HTTPS from an SEO perspective. It discusses why moving to HTTPS is important, how to pick the right SSL certificate and how much of the website to move at once. It identifies the most common on-page technical signals that need to be updated to HTTPS to avoid sending conflicting signals to search engines, explains how to configure Google Search Console for the move and how to monitor its impact with Google Search Console and the server log files, and shows how to improve overall performance for users with HSTS and HTTP/2.

What is HTTPS and why care?

If you have a website, or visit websites, you have to care about HTTPS. HTTPS, short for "Hypertext Transfer Protocol over TLS", is a protocol that allows secure communication between two parties on a network, such as the browser on a local computer and the server serving the content being accessed. Every website on the World Wide Web uses either HTTP or HTTPS. For example, this website uses the HTTPS protocol (as illustrated in the address bar).

Adding the 'S' to HTTP

The benefits of HTTPS are numerous, but the ones that stand out for regular users are:

- Security, for example, HTTPS prevents man-in-the-middle attacks;

- Privacy, for example, no online eavesdropping on users by third parties;

- Speed (more on this later).

For website owners, HTTPS also brings a number of advantages to the table, which are as follows:

- Security, which allows processing of sensitive information such as payment processing;

- Keeps referral data in Analytics: when visitors go from an HTTPS website to an HTTP website, the HTTP website loses the referral data of those visitors, whereas an HTTPS website retains referral data from visitors coming from both HTTP and HTTPS websites;

- Potentially improves rankings in Google Search Results;

- HTTP websites are shown as insecure by recent and upcoming browser versions, whereas HTTPS websites are shown as secure;

- Speed (more on this later).

However, HTTPS is not without challenges, which is why until recently only a modest share of the World Wide Web used this secure protocol, although this number is growing steadily. Since early 2017, Mozilla reports, through Let's Encrypt, that over 50% of web pages loaded by Firefox are served over HTTPS, and over 50% of the top 100 sites in the HTTP Archive also serve their content over HTTPS.

Some of the challenges that HTTPS involves are:

- Additional cost, as commercial SSL certificates cost money, and modern server infrastructure is needed to avoid additional RAM/CPU overhead;

- Technical complexity, as implementing SSL certificates on a server is far from easy. Until recently, each HTTPS-enabled domain also required a unique IP address; luckily, there is now Server Name Indication (SNI), which is supported by most major browsers;

- Switching from HTTP to HTTPS is considered a content move by search engines, which can lower rankings until all new HTTPS and old HTTP URLs have been re-crawled and reprocessed;

- Conflicts with the original design of the World Wide Web, where the additional "S" in the HTTPS protocol breaks hyperlinks (a fundamental pillar of the World Wide Web);

- Switching to HTTPS is a long-term strategy. Once committed to it, it will be difficult to stop operating an HTTPS version of the website. Even if the HTTPS version only redirects traffic back to the HTTP version, it needs to be kept live to keep passing external link juice and visitors to the HTTP version. In other words, once a website has been live on HTTPS for even a little while, it is unlikely that either the HTTPS or the HTTP version can ever be shut down as long as the website is up and running.

However, the long-term benefits do outweigh the challenges. This guide primarily focuses on addressing the content move challenge from a Search Engine Optimization point of view.

Kristopher Jones

[Founder and CEO of LSEO.com]

More than ever, people are concerned about security, and HTTPS provides additional security to users and therefore builds trust between them and your website. It's also important to realize that Google prefers sites that are trusted and certified. In fact, Google has openly suggested that sites secured with HTTPS will be given a "boost" in search rankings. There are other, more obvious SEO benefits to moving to HTTPS. For instance, when traffic passes to an HTTPS site, the secure referral information is preserved. This sort of reverses the dreaded "not provided" that makes it nearly impossible to properly attribute traffic and sales to specific keywords. If you haven't already, I strongly advise you to move your website to HTTPS.

Getting ready with SSL Certificates

Before going further into the SEO aspects of moving to HTTPS, let's make sure the setup of the server is correctly implemented and nothing stands in the way to continue with the content move.

Buy a commercial SSL certificate

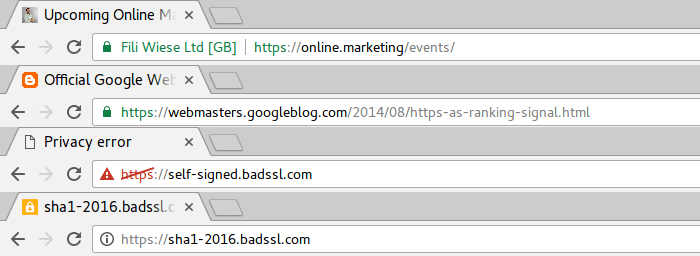

Although it is possible to use self-signed SSL certificates or free community-provided SSL certificates, and most public SSL certificate types do have a certificate authority behind them, the one thing commercial SSL certificates can offer in addition is Extended Validation (EV) SSL certificates. These EV SSL certificates require additional verification of the requesting entity's identity and can take some extra legwork to get approved. Browsers have traditionally reflected this as a green bar with the company name in the address bar.

When choosing an SSL certificate type, keep in mind that community-provided SSL certificates are still in their early days and that prices for commercial SSL certificates have dropped significantly in the last few years, to less than $10 USD per year per certificate. As such, commercial SSL certificates are recommended for commercial websites for now, as it is still possible to switch to other options later.

When choosing a commercial SSL certificate, also consider that several validation types of SSL certificates can be bought and used. In principle, any of them is fine: in my experience, the validation type makes no difference to Google, but it can make a difference to the users of the website.

Google Chrome displaying different HTTPS URLs

Encryption

Several options are available when creating an SSL certificate (commercial or self-signed). It is better to choose a certificate signed with SHA-2 (e.g. SHA-256), as this is more secure than SHA-1. For this reason, most major browsers have deprecated SHA-1 certificates, and websites using them now appear as insecure, defeating the purpose of using HTTPS.

Server Implementation

To implement the SSL certificates on the server infrastructure, check with the hosting provider, the IT team or the web developers.

If using Server Name Indication (SNI), check the Analytics data of the current website to see whether old browsers that do not support SNI still visit the website frequently.

Once the server has been set up with an SSL certificate for the domain name on port 443, the setup and the server environment need to be checked and validated. The SSL certificate can be validated using this tool, and the server setup can be checked with this tool. All errors, if any, have to be resolved before continuing.
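Besides the dedicated online tools, the certificate can also be spot-checked from a script. The following is a minimal Python sketch using only the standard library; the host name is a placeholder, and `fetch_certificate` and `days_until_expiry` are illustrative helpers, not part of any tool mentioned in this guide. The default SSL context validates the certificate chain and the host name, and passing `server_hostname` also sends the SNI extension, so a failing handshake points at the same kind of errors the validation tools report.

```python
import socket
import ssl
import time
from typing import Optional


def fetch_certificate(host: str, port: int = 443, timeout: float = 10.0) -> dict:
    """Connect over TLS with SNI and return the validated peer certificate.

    ssl.create_default_context() verifies the certificate chain and the host
    name, so an invalid, expired or mismatched certificate raises
    ssl.SSLCertVerificationError instead of returning.
    """
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        # server_hostname sends the SNI extension and enables host name checks.
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()


def days_until_expiry(cert: dict, now: Optional[float] = None) -> float:
    """Days left until the certificate's notAfter date (negative if expired).

    getpeercert() stores the date as text, e.g. 'Jan  5 09:34:43 2018 GMT'.
    """
    expires_at = ssl.cert_time_to_seconds(cert["notAfter"])
    current = time.time() if now is None else now
    return (expires_at - current) / 86400
```

For example, `days_until_expiry(fetch_certificate("www.example.com"))` returns the remaining validity in days; running such a check on a schedule is one way to catch a certificate before it expires.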

Note: To avoid any issues while updating the website for the move to HTTPS in the next steps, it is recommended to create a separate document root (home directory) on the same server or on another server instance and serve the HTTPS traffic from it.

Larry Kim

[CEO of MobileMonkey, Founder of Wordstream]

Be sure to register the SSL certificate for a long time (5 years or so) so that you don’t have the problem of expiring certificates every year.

Preparing for the move to HTTPS

Before discussing the next steps, this guide is based on a few assumptions:

- No changes to the content (except link annotations) are made;

- No changes to the templates (except link annotations) are made;

- No changes to the URL structure (except for the protocol change) are made;

- The HTTPS version of the domain name is live on port 443, and validated as described in the previous chapter. Most likely, the root of the domain name on HTTPS returns an empty directory listing.

If any of the first three mentioned assumptions are incorrect, then be sure to read "How to move your content to a new location" on the official Google Webmaster Blog.

Define a content move strategy

The next step is to choose a strategy for moving to HTTPS. Moving a small website (e.g. fewer than ten thousand URLs) or a large website (e.g. more than one million URLs) to HTTPS can call for different options for moving content, for example:

- Move the entire domain to HTTPS, including all subdomains, in one go;

- Move only one or more subdomains and/or subdirectories to HTTPS, before moving the rest;

- Move the content and operate two duplicate sites on HTTP and HTTPS, before finalizing the move.

As part of the strategy, the following question also needs to be answered: How long will the HTTP version still be accessible?

The factors to consider differ depending on the size of the website, the availability of the IT support team, and the organizational structure behind the website (e.g. internal company politics). While this guide cannot address the last two points for every website, the first point, the size of the website, is definitely something to consider from an SEO point of view, as it translates into the available crawl budget.

Importance of Crawl Budget

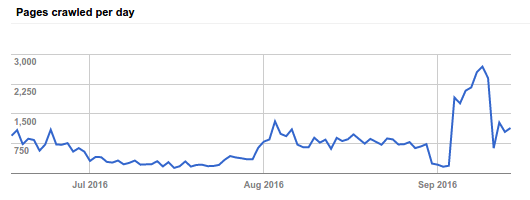

For search engines to process the protocol change, their bots have to re-crawl a significant part of the HTTP URLs and all of the new HTTPS URLs of the website. So, a website with 1,000,000 URLs will require search engine bots to re-crawl roughly 2,000,000 URLs (or at least a significant share of them) to pick up the 301 redirects and recalculate the rankings for the new HTTPS URLs based on the history of the HTTP URLs. If a search engine bot crawls an average of 30,000 unique, non-repeated URLs of the website per day, and assuming no crawl budget is wasted on irrelevant URLs, it can take roughly 67 days to re-crawl all URLs. During this time, the website may suffer in search engine rankings.
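The estimate above is simple arithmetic, which can be captured in a small helper to try out other site sizes and crawl rates. This Python sketch assumes, like the text, that every old HTTP URL and every new HTTPS URL has to be crawled once (a factor of 2) and that no crawl budget is wasted; real crawl rates fluctuate, so treat the result as an order of magnitude. The function name is illustrative.

```python
import math


def estimate_recrawl_days(site_urls: int, crawled_per_day: int, recrawl_factor: float = 2.0) -> float:
    """Rough number of days for a search engine to re-crawl a site after the move.

    recrawl_factor=2.0 counts each old HTTP URL (to pick up its 301 redirect)
    plus each new HTTPS URL once. Repeat crawls and wasted crawl budget are ignored.
    """
    if crawled_per_day <= 0:
        raise ValueError("crawled_per_day must be positive")
    return site_urls * recrawl_factor / crawled_per_day


days = estimate_recrawl_days(1_000_000, 30_000)
print(f"{days:.1f} days, roughly {math.ceil(days)} days")  # 66.7 days, roughly 67 days
```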

Example of how many pages Googlebot crawls per day

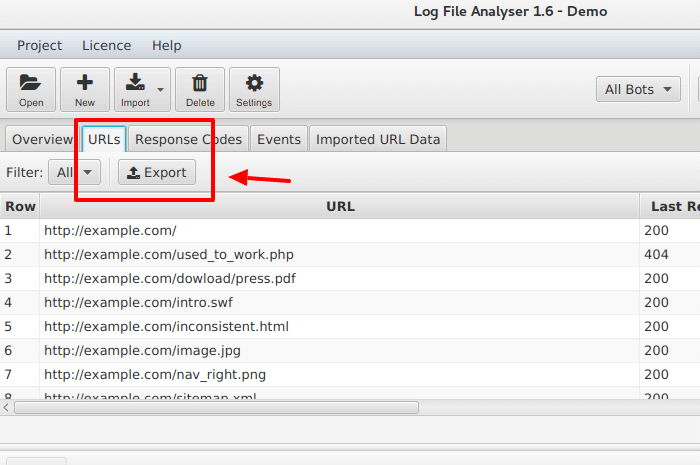

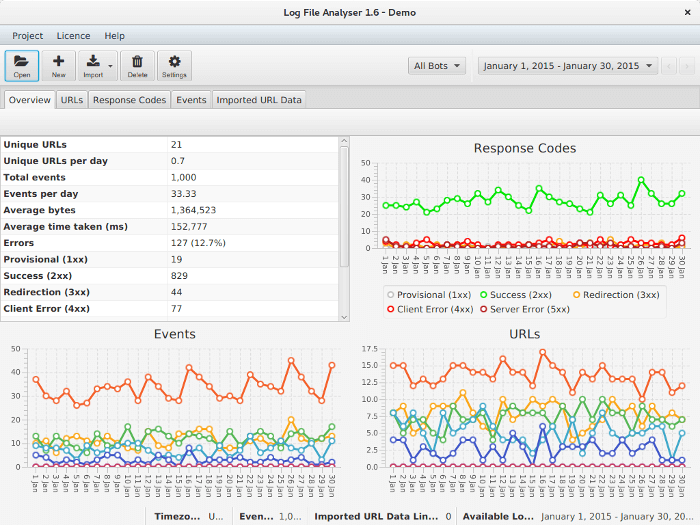

Utilize Server Log Files

To make sure search engine bots do not waste crawl budget, first check the Server Log Files and find out which URLs each search bot has crawled in the last two years (or longer, if possible). It is also helpful to know how often each URL was crawled (to determine priority), but for this process all the URLs are needed anyway. From here on, this guide primarily focuses on crawl data from Googlebot.

For smaller sites, Screaming Frog Log Analyser can do this task rather easily. For larger websites, talk to the IT team and/or utilize a big data solution such as Google BigQuery to extract all URLs.

Example of how to export URLs from server logs using Screaming Frog Log Analyser

It may also be necessary to ask the hosting provider of the website for the log files. If no log files are available for the last two years (assuming the website is not brand new), start logging as soon as possible. Without log files, the organization misses out on crucial SEO data and important business analytics data.

Save the extracted URLs in a separate file (one URL per line), for example, as logs_extracted_urls.csv.
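Where a desktop log analyser is not practical, a short script can do the extraction. The Python sketch below assumes access logs in the common Apache/Nginx "combined" format and identifies Googlebot by its user-agent string only; user agents can be spoofed, so verifying requests via reverse DNS is more reliable. The host name and the helper names are placeholders; the output file name follows the example above.

```python
import re
from collections import Counter
from typing import Iterable

# Combined log format: ip ident user [time] "METHOD path PROTO" status bytes "referer" "agent"
LOG_LINE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "[A-Z]+ (?P<path>\S+)[^"]*" '
    r'\d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_urls(lines: Iterable[str], host: str) -> Counter:
    """Count how often Googlebot requested each URL (matched by user agent only)."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            # Before the move, every logged request was made over HTTP.
            counts[f"http://{host}{match.group('path')}"] += 1
    return counts


def save_url_list(counts: Counter, path: str = "logs_extracted_urls.csv") -> None:
    """Write one URL per line, most frequently crawled first."""
    with open(path, "w", encoding="utf-8") as out:
        for url, _count in counts.most_common():
            out.write(url + "\n")
```

For example, with a hypothetical `access.log`: `with open("access.log", encoding="utf-8", errors="replace") as log: save_url_list(googlebot_urls(log, "www.example.com"))`. Sorting by crawl frequency keeps the priority information mentioned above.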

Extract Sitemap URLs

Assuming the website has one or more XML Sitemaps, and that these contain all unique canonical URLs of the indexable pages, Google Search Console will report how many URLs from the XML Sitemaps have been submitted to Google. Download the XML Sitemaps and extract all unique URLs from them.

Save the extracted URLs in a separate file (one URL per line), for example, as sitemap_extracted_urls.csv.
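Extracting the URLs from a sitemap is a small XML parsing job. The following Python sketch handles a regular sitemap (a `<urlset>`) and only reads the `<loc>` elements of the sitemap namespace, so image and video URLs are skipped; for a sitemap index file, it would need to be run on each child sitemap. The function name is illustrative.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> list:
    """Return the unique page URLs of a <urlset> sitemap, in document order."""
    root = ET.fromstring(xml_text)
    locs = (
        loc.text.strip()
        for loc in root.iterfind("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    )
    # dict.fromkeys removes duplicates while keeping the original order.
    return list(dict.fromkeys(locs))
```

The returned list can then be written to sitemap_extracted_urls.csv with one URL per line.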

How much of the website to move?

At this point, there is enough data to determine the next step: How much of the website to move to HTTPS?

To calculate the number of URLs to move, gather the following data:

- A list of unique URLs crawled by Googlebot, extracted from the Server Log Files;

- The average number of URLs Googlebot crawls per day, based on the Crawl Stats in Google Search Console and the Server Log Files;

- A list of unique URLs extracted from the XML Sitemaps.

If the average number of URLs crawled by Googlebot per day (based on the Server Log Files) is between 5% and 100% of the total number of unique URLs extracted from the XML Sitemaps, it is relatively safe to move the entire domain to HTTPS in one go. In this case, chances are that search engine bots will re-crawl the entire domain within three to four weeks, depending on the internal linking structure and several other factors. Let's call this scenario 1: "move in-one-go."

If the average number of URLs crawled by Googlebot per day is between 1% and 5% of the total number of unique URLs extracted from the XML Sitemaps, it is safer to move one or more subdomains and/or subdirectories to HTTPS in phases. In this case, chances are that it will take Googlebot a long time to re-crawl all URLs of the entire domain, so recovering in Google Search Results may take longer than the standard few weeks. Let's call this scenario 2: "partial move." This phase is repeated as many times as necessary until the entire domain has been moved to HTTPS.

If the site is really big, another option is on the table: operating two websites side by side, one on HTTP and one on HTTPS. While waiting for a significant number of URLs to be re-crawled, canonicals are used to move the content. For this to work, website owners depend on search engines trusting the website's canonical signals. Let's call this scenario 3: "move through canonicals." Once an adequate number of URLs have been re-crawled, scenario 1 or 2 can be applied to finalize the move to HTTPS.
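The rule of thumb above can be expressed as a small function. In this Python sketch the 5% and 1% thresholds come from the text; mapping everything below 1% to "move through canonicals" is an assumption, since the guide only reserves that scenario for really big sites. The function name is hypothetical.

```python
def choose_move_strategy(avg_crawled_per_day: int, sitemap_url_count: int) -> str:
    """Suggest a move scenario from Googlebot's daily crawl rate.

    Thresholds follow the guide: 5% or more of the sitemap URLs crawled per
    day suggests moving in one go, 1% to 5% a partial move. Anything below
    1% is mapped to "move through canonicals" as an assumption.
    """
    if sitemap_url_count <= 0:
        raise ValueError("sitemap_url_count must be positive")
    ratio = avg_crawled_per_day / sitemap_url_count
    if ratio >= 0.05:
        return "move in-one-go"
    if ratio >= 0.01:
        return "partial move"
    return "move through canonicals"
```

For example, 30,000 URLs crawled per day against 1,000,000 sitemap URLs is a 3% ratio, so `choose_move_strategy(30_000, 1_000_000)` returns "partial move".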

Crawl the HTTP website

Next, use a crawler, such as Screaming Frog SEO Spider, DeepCrawl, Botify or OnPage.org, to crawl the entire current website on the HTTP protocol (or the relevant sections of it), and extract all unique, internally linked URLs that search engines can crawl. This includes all assets internal to the website, such as robots.txt, JavaScript, image, font and CSS files. This data will be needed towards the end, to double check whether the move has been successful.

Save the extracted URLs in a separate file (one URL per line), for example, as crawl_extracted_urls.csv.

Note: If the website is too big, e.g. more than ten million URLs, the "partial move" or "move through canonicals" scenario is recommended, as it is the safest course of action. Try to split up the website into manageable sections, based on subdomains and/or subdirectories, and crawl these one by one to get as many unique URLs as possible.

Blocking Search Engine Bots

In the "move in-one-go" and "partial move" scenarios, and depending on the size of the website and how quickly the next steps can be completed, it may be useful to block search engine bots from crawling the HTTPS website while it is being set up, to avoid sending conflicting signals to search engines. This can be done with a robots.txt file on the HTTPS version, blocking either the entire HTTPS version or just a part of it. Use the following code snippet in the robots.txt on the HTTPS version to block all bots completely:

User-Agent: *
Disallow: /

Note: This step is optional and heavily dependent on how quickly the website can be moved. If it can be moved in less than a few days, there is no need for this. This method can also be used to safely test most aspects of the move, before letting search engine bots know about the move.

In case of the "move through canonicals" scenario, this particular step is not recommended.
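Before publishing the HTTPS robots.txt, its effect can be sanity-checked with Python's built-in `urllib.robotparser`. Note that this parser is simpler than Googlebot's (for example, it does not support wildcards in paths), so the check is a quick test, not a guarantee of how Google interprets the file. The `is_blocked` helper and the example URLs are illustrative.

```python
from urllib.robotparser import RobotFileParser

# robots.txt served on the HTTPS version while it is being set up.
HTTPS_ROBOTS_TXT = """\
User-Agent: *
Disallow: /
"""


def is_blocked(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if robots_txt disallows user_agent from fetching url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)
```

While the block is in place, `is_blocked(HTTPS_ROBOTS_TXT, "https://www.example.com/deep/url")` should return True; the same check against the final robots.txt should return False for pages meant to be crawled.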

Cyrus Shepard

[Co-creator of Fazillion Media]

Transitioning to HTTPS is important, but it can also be hugely challenging, even to those who consider themselves technically competent. The challenges fall roughly into 2 camps:

- Purchasing and installing the certificate: This is mostly a challenge for those who are less technical, or with less experience setting up their hosting environment. Thankfully, many services are making this easier today. Services like Cloudflare, Let's Encrypt SSL, and many hosting providers are providing one-click solutions that are oftentimes free of charge.

- The second challenge is simply the vast technical complication of making sure all of your web assets are secure (basically meaning all images, plugins, JavaScript, and everything else behind the scenes is served via HTTPS). For small sites, this isn't too difficult. For larger, older sites, tracking down and fixing all the assets can literally take weeks or months. Tools like Screaming Frog's "Insecure Content Report" and various WordPress plugins make the job easier, but it's definitely something every single person faces when migrating to HTTPS.

There are many other challenges as well, but once these two basic steps are conquered the rest can usually be handled with ease.

Copying and Updating Content

Duplicate the content of the HTTP version to the location of the HTTPS version, including the XML Sitemaps and all other files. Often, this just involves copying the content of one directory to another directory on the same server.

Once this has been done, the HTTPS version needs to be updated. The following suggested changes only apply to the HTTPS version and not the still live HTTP version, unless specified otherwise.

Canonicals

Update all the canonicals to absolute HTTPS URLs on the HTTPS version.

<link href="http://www.example.com/deep/url" rel="canonical" />

Becomes

<link href="https://www.example.com/deep/url" rel="canonical" />

Avoid using relative URLs in canonicals. If the site currently has no canonicals, implement them first before proceeding. Also, be sure to update the canonicals on the mobile version of the website to the HTTPS version. To learn more about canonicals, click here.

Pagination

If pagination annotations are used on the website, update them to absolute HTTPS URLs on the HTTPS version.

<link href="http://www.example.com/deep/url?page=1" rel="prev" />

<link href="http://www.example.com/deep/url?page=3" rel="next" />

Becomes

<link href="https://www.example.com/deep/url?page=1" rel="prev" />

<link href="https://www.example.com/deep/url?page=3" rel="next" />

For more information about pagination, visit this documentation.

Alternate Annotations

There are several alternate annotations that can be implemented on a website, and they all need to be updated.

Hreflang

If the website uses Hreflang annotations in the XML Sitemaps and/or on the website itself, these need to be updated to absolute HTTPS URLs on the HTTPS version.

<link rel="alternate" hreflang="x-default" href="http://www.example.com/" />

<link rel="alternate" hreflang="es" href="http://www.example.com/es/" />

<link rel="alternate" hreflang="fr" href="http://www.example.com/fr/" />

Becomes

<link rel="alternate" hreflang="x-default" href="https://www.example.com/" />

<link rel="alternate" hreflang="es" href="https://www.example.com/es/" />

<link rel="alternate" hreflang="fr" href="https://www.example.com/fr/" />

For more information about Hreflang, visit this documentation.

Mobile

If there is a separate mobile website, mobile alternate annotations are likely present on the website.

<link rel="alternate" media="only screen and (max-width: 640px)" href="http://m.example.com/page-1">

Becomes

<link rel="alternate" media="only screen and (max-width: 640px)" href="https://m.example.com/page-1">

For more information about alternate URLs for mobile, visit this documentation.

Feeds

Alternate annotations pointing to Atom, RSS or JSON feeds also need to be updated on the website.

<link href="http://www.example.com/feed/rss/" rel="alternate" type="application/rss+xml" />

<link href="http://www.example.com/feed/atom/" rel="alternate" type="application/atom+xml" />

<link href="http://www.example.com/json.as" rel="alternate" type="application/activitystream+json" />

Becomes

<link href="https://www.example.com/feed/rss/" rel="alternate" type="application/rss+xml" />

<link href="https://www.example.com/feed/atom/" rel="alternate" type="application/atom+xml" />

<link href="https://www.example.com/json.as" rel="alternate" type="application/activitystream+json" />
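Once the annotations have been updated, a quick audit of the HTML helps catch any that were missed. This Python sketch uses the standard library's `html.parser` to list `<link>` elements whose href still starts with "http://"; the set of rel values checked is an assumption and can be extended (it already includes the resource hints discussed later). It only inspects HTML markup, so annotations in HTTP headers or XML Sitemaps need separate checks.

```python
from html.parser import HTMLParser

# rel values covered in this guide; extend as needed (assumption).
CHECKED_RELS = {"canonical", "prev", "next", "alternate", "preconnect", "dns-prefetch"}


class InsecureLinkAudit(HTMLParser):
    """Collect (rel, href) pairs of <link> annotations that still use http://."""

    def __init__(self) -> None:
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        # Self-closing <link ... /> tags also arrive here via handle_startendtag.
        if tag != "link":
            return
        attributes = dict(attrs)
        rels = (attributes.get("rel") or "").lower().split()
        href = attributes.get("href") or ""
        if CHECKED_RELS.intersection(rels) and href.lower().startswith("http://"):
            self.insecure.append((" ".join(rels), href))


def insecure_link_annotations(html: str) -> list:
    audit = InsecureLinkAudit()
    audit.feed(html)
    audit.close()
    return audit.insecure
```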

Internal Links

If the website uses only relative internal links, including in JavaScript and CSS files, you can skip this step.

Internal links are important for users and search engines, and most websites also depend heavily on assets, such as JavaScript, CSS, Web Fonts, Video and Image files, including a favicon. These internal links and references can be found throughout the HTML source, and the assets themselves may also contain internal links, e.g. image file references in CSS files or internal URLs in JavaScript files.

For example, the following types of internal links need to be updated:

- Links to other internal URLs inside the HTML source code;

- Links to internal Image files inside the HTML source code;

- Links to internal Video files inside the HTML source code;

- Links to internal Web Fonts inside the HTML source code;

- Links to internal JavaScript files inside the HTML source code;

- Links to other internal URLs inside the JavaScript files;

- Links to internal Image files inside the JavaScript files;

- Links to internal CSS files inside the JavaScript files;

- Links to internal CSS files inside the HTML source code;

- Links to internal Image files inside the CSS files;

- Links to internal Web Fonts inside the CSS files;

- And any other internal link.

To do this update, there are a few options.

Option 1

Switch to using only relative URLs, for example:

<a href="http://www.example.com/">home</a>

Becomes

<a href="/">home</a>

This option may conflict with internal links to assets, especially when they are defined in CSS and/or JavaScript files. With this option, it may also be useful to define a base URL with a base tag at the top of the HEAD of the HTML source code.

<base href="https://www.example.com" />

Option 2

Change the protocol of absolute internal URLs from HTTP to HTTPS, for example:

<a href="http://www.example.com/">home</a>

Becomes

<a href="https://www.example.com/">home</a>

Option 3

Remove the protocol from absolute internal URLs, for example:

<a href="http://www.example.com/">home</a>

Becomes

<a href="//www.example.com/">home</a>

This option makes links dependent on the protocol of the page being visited.

For search engines and end users, it does not really matter which of the three options above is used, as search engine bots and browsers tend to be smart enough to figure out the final absolute URL. However, option 2 is the safest choice.
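For option 2, the protocol change can be scripted for templates, CSS and JavaScript files. The following is a regex-based Python sketch, not an HTML parser: it only rewrites absolute http:// URLs on the hosts listed in `OWN_HOSTS` (hypothetical host names), and deliberately leaves third-party URLs alone because those may not be available over HTTPS. Review the resulting changes before deploying them.

```python
import re

# Hosts that are moving to HTTPS (hypothetical; adjust to the website).
OWN_HOSTS = ("www.example.com", "m.example.com", "cdn.example.com")


def upgrade_internal_urls(text: str, hosts=OWN_HOSTS) -> str:
    """Rewrite absolute http:// URLs on the given hosts to https:// (option 2)."""
    host_pattern = "|".join(re.escape(host) for host in hosts)
    # The lookahead requires the host name to end right there, so hosts such as
    # "www.example.com.evil.net" or "www.example.community" are not rewritten.
    pattern = re.compile(
        r"http://(" + host_pattern + r")(?=[/?#\"'\s)>;,]|$)", re.IGNORECASE
    )
    return pattern.sub(r"https://\1", text)
```

Matching on an explicit host list, instead of replacing every "http://", is what keeps the sketch from breaking links to third parties that only serve HTTP.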

WordPress

Websites running on the popular WordPress platform may find this plugin or this search-and-replace database script useful to quickly update any internal links within the database. However, don't forget to update the theme files and the General Settings as well. More best practices for moving a WordPress website to HTTPS can be found here.

Internal Redirects

If any internal links point to an internal redirect, it is recommended to avoid the redirect chain and instead improve the internal linking structure by linking directly to the canonical HTTPS end destination rather than to the redirect.

In addition, the internal linking structure needs to be updated to point to the right URLs to avoid any redirect chains. For example, avoid a situation where an HTTPS URL (a) links to an HTTP URL (b), which then redirects to another HTTPS URL (c), or worse, back to the original HTTPS URL (a).
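Redirect chains and loops like these can be found from a crawler's redirect export. Assuming that export has been turned into a simple {source URL: target URL} mapping (a hypothetical input format), the following Python sketch follows each internally linked URL to its final destination, reports the number of hops and raises an error on loops, so that internal links can be pointed straight at the final HTTPS URL.

```python
def follow_redirects(start: str, redirects: dict, max_hops: int = 10) -> tuple:
    """Follow start through a {source: target} redirect map.

    Returns (final_url, hops). Raises ValueError on a redirect loop or when
    the chain is longer than max_hops.
    """
    chain = [start]
    url = start
    while url in redirects:
        url = redirects[url]
        if url in chain:
            raise ValueError("redirect loop: " + " -> ".join(chain + [url]))
        chain.append(url)
        if len(chain) - 1 > max_hops:
            raise ValueError("too many redirects starting at " + start)
    return url, len(chain) - 1


def links_to_update(linked_urls, redirects: dict) -> dict:
    """Map every internally linked URL that redirects to its final destination."""
    updates = {}
    for url in linked_urls:
        final_url, hops = follow_redirects(url, redirects)
        if hops:
            updates[url] = final_url
    return updates
```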

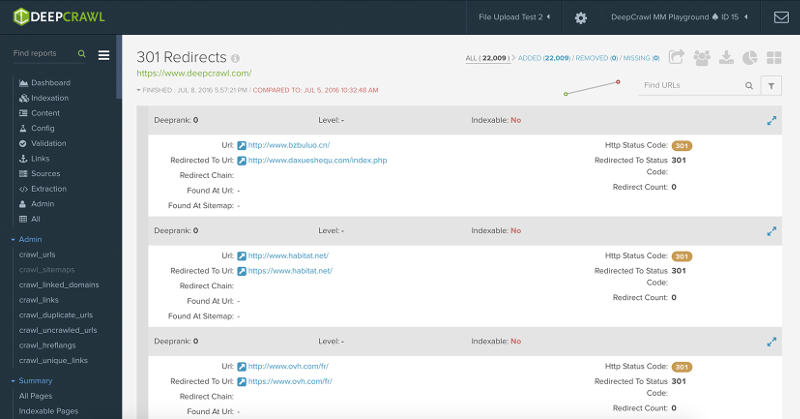

Example of all redirects as found by DeepCrawl

Updating CDN Settings

Assets used to render a page, such as JavaScript, image and CSS files, are often loaded from a CDN (Content Distribution Network) that may or may not be under the control of the website owner. Any references to assets loaded from the CDN need to point to HTTPS. In this case, too, it is possible to remove the protocol from the absolute URL, for example:

<script src="https://ajax.googleapis.com/ajax/libs/jquery/3.1.0/jquery.min.js"></script>

Becomes

<script src="//ajax.googleapis.com/ajax/libs/jquery/3.1.0/jquery.min.js"></script>

This does mean that the CDN needs to be able to serve the assets over HTTPS. If the CDN is mapped to a subdomain of the website, and is therefore most likely under the control of the website owner, the same SSL certificates may need to be uploaded to the CDN and used for every request (depending on the type of SSL certificate).

If the original asset is also accessible on the HTTPS version of the website, but pages mostly link to the CDN copy on HTTPS, it is important to canonicalize the CDN copy back to the original asset on the HTTPS version using HTTP Headers. For example, the asset on the CDN linked from the HTTPS version:

<img src="//cdn.example.com/image1.png" />

Needs to return a link reference with a canonical to the asset source file on the HTTPS version in the HTTP Header response:

Link: <https://www.example.com/image1.png>; rel="canonical"

This will communicate to search engines that the asset source file on the HTTPS version is the original version and avoid potential duplication issues with the CDN asset file.

When updating the CDN settings, be sure to purge the data cached by the CDN to avoid unexpected conflicts on the website.
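How the canonical Link header gets added depends on the server and the CDN. As one possibility, if the CDN pulls assets from the website's own server and passes response headers through (an assumption to verify with the CDN provider), the origin can add the header. The following is a minimal Python WSGI middleware sketch; `CANONICAL_ORIGIN`, the list of image extensions and the class name are hypothetical.

```python
CANONICAL_ORIGIN = "https://www.example.com"  # hypothetical canonical host
ASSET_SUFFIXES = (".png", ".jpg", ".jpeg", ".gif", ".webp", ".svg")


def canonical_link_header(path: str) -> str:
    """Link header value pointing an asset at its source file on HTTPS."""
    return f'<{CANONICAL_ORIGIN}{path}>; rel="canonical"'


class CanonicalAssetHeaders:
    """WSGI middleware that adds a canonical Link header to image responses."""

    def __init__(self, app):
        self.app = app

    def __call__(self, environ, start_response):
        path = environ.get("PATH_INFO", "")

        def start_with_link(status, headers, exc_info=None):
            if path.lower().endswith(ASSET_SUFFIXES):
                headers = list(headers) + [("Link", canonical_link_header(path))]
            return start_response(status, headers, exc_info)

        return self.app(environ, start_with_link)
```

With this middleware, a request for /image1.png gets the header `Link: <https://www.example.com/image1.png>; rel="canonical"`, while HTML pages are left untouched.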

XML Sitemaps

Assuming XML Sitemaps were present before the move (if not, be sure to create or export them based on the initial crawl), they have to remain accessible on the HTTP version. Leaving the original XML Sitemaps live on the HTTP version makes it possible to track the indexation status in Google Search Console (under Sitemaps), which proves useful as the old URLs get crawled and re-indexed on the HTTPS version.

Make sure no redirects or non-existent or non-indexable URLs are listed in the XML Sitemaps on the HTTP version, only the canonicals of indexable pages. Otherwise, the submitted versus indexed numbers become unreliable.

Updating the XML Sitemap

Next, copy the XML Sitemaps of the HTTP version and save them as new files. In the new XML Sitemaps, change the protocol of each URL within the <url> block. For example:

<url>

<loc>http://www.example.com/</loc>

<xhtml:link rel="alternate" hreflang="x-default" href="http://www.example.com/" />

<xhtml:link rel="alternate" hreflang="es" href="http://www.example.com/es/" />

<xhtml:link rel="alternate" hreflang="fr" href="http://www.example.com/fr/" />

<xhtml:link rel="alternate" media="only screen and (max-width: 640px)" href="http://m.example.com/" />

<image:image>

<image:loc>http://www.example.com/image.jpg</image:loc>

</image:image>

<video:video>

<video:content_loc>http://www.example.com/video123.flv</video:content_loc>

</video:video>

</url>

Becomes

<url>

<loc>https://www.example.com/</loc>

<xhtml:link rel="alternate" hreflang="x-default" href="https://www.example.com/" />

<xhtml:link rel="alternate" hreflang="es" href="https://www.example.com/es/" />

<xhtml:link rel="alternate" hreflang="fr" href="https://www.example.com/fr/" />

<xhtml:link rel="alternate" media="only screen and (max-width: 640px)" href="https://m.example.com/" />

<image:image>

<image:loc>https://www.example.com/image.jpg</image:loc>

</image:image>

<video:video>

<video:content_loc>https://www.example.com/video123.flv</video:content_loc>

</video:video>

</url>

This example assumes that all content is moved to the HTTPS version. If this is not the case, update only the URLs of the content that is being moved.

If possible, create XML Sitemaps for every subdomain or subsection of the website on both the HTTP and the HTTPS versions, and update the HTTPS versions accordingly. If the website is large, consider using XML Sitemap index files to group the different XML Sitemaps for each subdomain or subsection. Again, this will help at a later stage to track the indexation numbers of both the HTTP and the HTTPS versions.
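Rather than editing sitemap files by hand, the protocol change within the <url> blocks can be scripted. This Python sketch parses a sitemap with `xml.etree.ElementTree` and rewrites element text and href attributes that are http:// URLs on the hosts passed in `moved_hosts`, so only content that is actually moving is changed. It registers the usual sitemap prefixes so the output keeps readable names such as image:loc. It is an illustration under these assumptions, not a validated sitemap generator.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

# Register common sitemap prefixes so the output does not use ns0, ns1, ...
for prefix, uri in {
    "": "http://www.sitemaps.org/schemas/sitemap/0.9",
    "xhtml": "http://www.w3.org/1999/xhtml",
    "image": "http://www.google.com/schemas/sitemap-image/1.1",
    "video": "http://www.google.com/schemas/sitemap-video/1.1",
}.items():
    ET.register_namespace(prefix, uri)


def upgrade_sitemap_url(value: str, moved_hosts: set) -> str:
    """Return value with https:// if it is an http:// URL on a moved host."""
    try:
        parts = urlsplit(value)
    except ValueError:  # not a parseable URL; leave it unchanged
        return value
    if parts.scheme == "http" and parts.hostname in moved_hosts:
        return parts._replace(scheme="https").geturl()
    return value


def upgrade_sitemap(xml_text: str, moved_hosts: set) -> str:
    """Rewrite <loc>, image/video URLs and xhtml:link hrefs to HTTPS."""
    root = ET.fromstring(xml_text)
    for element in root.iter():
        if element.text and element.text.strip():
            text = element.text.strip()
            upgraded = upgrade_sitemap_url(text, moved_hosts)
            if upgraded != text:
                element.text = upgraded
        if "href" in element.attrib:
            element.set("href", upgrade_sitemap_url(element.get("href"), moved_hosts))
    return ET.tostring(root, encoding="unicode")
```

For a partial move, pass only the hosts being moved; URLs on other hosts stay on HTTP.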

Resource Hints

If the website uses Resource Hints, such as dns-prefetch, preload, preconnect, prerender and prefetch, these also need to be updated. For example:

<link rel="preconnect" href="http://cdn.example.com" pr="0.42">

Becomes

<link rel="preconnect" href="https://cdn.example.com" pr="0.42">

Or

<link rel="preconnect" href="//cdn.example.com" pr="0.42">

Double check for Resource Hints in the HTTP Headers, the HEAD of the HTML, and the JavaScript code.

CSS and JavaScript

Most websites depend heavily on assets, such as CSS for styling and JavaScript for interaction. What most SEOs tend to forget when moving content is that these assets often import or load other assets, such as images or other CSS or JavaScript files, from the same or other servers.

@import 'http://fonts.googleapis.com/css?family=Open+Sans';

Becomes

@import 'https://fonts.googleapis.com/css?family=Open+Sans';

Search all CSS and JavaScript files for the "http://" pattern and test whether it can be replaced with "https://". If an asset is loaded with a URL starting with "//" (a protocol-relative URL), the browser requests it over the same protocol as the page (once the move is complete, this will be HTTPS), so the asset must be available on both HTTP and HTTPS.

Not all assets are available on HTTPS by default, especially those loaded or imported from third-party sources. In this case, check with the third party whether an HTTPS URL is available, consider copying the asset onto the same server as the website and loading it from there, and/or find an alternative third-party source or asset.
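Searching for the "http://" pattern across many files is easy to script. This Python sketch only lists where each http:// URL occurs (file, line number, URL) instead of replacing it, because every third-party host first needs to be checked for HTTPS support. The scanned file extensions are an assumption, and escaped forms such as "http:\/\/" inside JavaScript strings are not detected.

```python
import os
import re

# Absolute http:// URLs; a match stops at whitespace, quotes, parentheses and angle brackets.
HTTP_URL = re.compile(r"http://[^\s'\"()<>]+")


def find_http_references(root_dir: str, extensions=(".css", ".js")):
    """Yield (path, line_number, url) for each http:// URL in matching files."""
    for dirpath, dirnames, filenames in os.walk(root_dir):
        dirnames.sort()  # deterministic traversal order
        for name in sorted(filenames):
            if not name.lower().endswith(extensions):
                continue
            path = os.path.join(dirpath, name)
            with open(path, encoding="utf-8", errors="replace") as handle:
                for line_number, line in enumerate(handle, start=1):
                    for url in HTTP_URL.findall(line):
                        yield path, line_number, url
```

For example, `for path, line, url in find_http_references("public/assets"): print(f"{path}:{line}: {url}")` prints a review list for a hypothetical asset directory.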

HTTP Headers

HTTP Headers can be extremely powerful for communicating SEO signals to search engines while keeping the overhead in the code base minimal. These link annotations are often set in PHP code or in the .htaccess files of Apache servers. For example, the following can be applied in .htaccess:

<Files "testPDF.pdf">
Header add Link '<http://www.example.com/>; rel="canonical"'
</Files>

Always make sure to check the HTTP Headers of the website for any links, and when found, update them accordingly, for example:

Link: <http://www.example.com/es/>; rel="alternate"; hreflang="es"

Link: <http://www.example.com/>; rel="canonical"

Link: <http://example.com>; rel=dns-prefetch

Becomes

Link: <https://www.example.com/es/>; rel="alternate"; hreflang="es"

Link: <https://www.example.com/>; rel="canonical"

Link: <https://example.com>; rel=dns-prefetch

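Or, with protocol-relative URLs (note that Google asks for fully qualified URLs in hreflang annotations and recommends absolute URLs for canonicals, so the absolute HTTPS versions above are the safer choice):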

Link: <//www.example.com/es/>; rel="alternate"; hreflang="es"

Link: <//www.example.com/>; rel="canonical"

Link: <//example.com>; rel=dns-prefetch
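When the Link headers are generated from a list of values, the update can be scripted. A minimal Python sketch, assuming the header values are available as plain strings: it rewrites only link targets (the part between angle brackets, as defined in RFC 8288) that start with "http://" and leaves the parameters untouched.

```python
import re

# In a Link header, each target URL sits between angle brackets (RFC 8288).
HTTP_LINK_TARGET = re.compile(r"<http://([^>]*)>")


def upgrade_link_header(value):
    """Rewrite every http:// link target in a Link header value to https://."""
    return HTTP_LINK_TARGET.sub(r"<https://\1>", value)


for value in [
    '<http://www.example.com/es/>; rel="alternate"; hreflang="es"',
    '<http://www.example.com/>; rel="canonical"',
    "<http://example.com>; rel=dns-prefetch",
]:
    print("Link:", upgrade_link_header(value))
```

The three printed lines match the absolute HTTPS versions above. Comma-separated values holding several links in one header are handled too, because the pattern stops at each closing bracket.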

Structured Data

Search engines want data to be structured, and SEOs are often happy to provide it, in the hope that search engines will better understand the content and increase the visibility of the website in organic search. Schema.org is the primary structured data vocabulary at the time of writing. Luckily, Schema.org is available on both HTTP and HTTPS, so the HTTPS reference can be used in the code base.

Update any absolute URL references in the structured data used on the website, and all Schema.org references, to HTTPS. For example:

{

"@context": "http://schema.org",

"@type": "WebSite",

"name": "Your WebSite Name",

"alternateName": "An alternative name for your WebSite",

"url": "http://www.example.com"

}

Becomes

{

"@context": "https://schema.org",

"@type": "WebSite",

"name": "Your WebSite Name",

"alternateName": "An alternative name for your WebSite",

"url": "https://www.example.com"

}
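For larger JSON-LD blocks, the protocol swap can be automated. A minimal Python sketch, assuming the JSON-LD has already been extracted from the page: it walks the parsed data and rewrites every string value that starts with "http://", including the @context. Because it upgrades third-party URLs (for example in sameAs) as well, check that those targets actually exist on HTTPS; URLs embedded inside longer text values are not touched.

```python
import json


def upgrade_urls(node):
    """Recursively rewrite string values starting with http:// to https://."""
    if isinstance(node, dict):
        return {key: upgrade_urls(value) for key, value in node.items()}
    if isinstance(node, list):
        return [upgrade_urls(item) for item in node]
    if isinstance(node, str) and node.startswith("http://"):
        return "https://" + node[len("http://"):]
    return node


before = """{
  "@context": "http://schema.org",
  "@type": "WebSite",
  "name": "Your WebSite Name",
  "alternateName": "An alternative name for your WebSite",
  "url": "http://www.example.com"
}"""
print(json.dumps(upgrade_urls(json.loads(before)), indent=2))
```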

It is possible to use "//" for the URL in this example, but not for the context reference. For example, this works too:

{

"@context": "https://schema.org",

"@type": "WebSite",

"name": "Your WebSite Name",

"alternateName": "An alternative name for your WebSite",

"url": "//www.example.com"

}

However, this is not valid according to the Google Structured Data Testing Tool:

{

"@context": "//schema.org",

"@type": "WebSite",

"name": "Your WebSite Name",

"alternateName": "An alternative name for your WebSite",

"url": "//www.example.com"

}

Check for JSON-LD, Microdata, RDFa or other possible structured data references in the code base, and when found, update the protocol of every URL referenced in the structured data to HTTPS.

RSS / Atom feeds

Another item that is often overlooked is the RSS and/or Atom feed of a website. Although RSS/Atom usage has declined since the shutdown of Google Reader, feeds are still widely used by alternative feed readers and other programs that rely on syndication.

Check whether the website has any feeds, and when found, verify on the HTTPS version that both the link to each article and the links inside the article content have been updated to HTTPS. If not, depending on the platform that generates the feeds, it may be necessary to talk to the web developers or the IT team and update all links to absolute HTTPS URLs (don't use just "//", as it is unknown where the content may be syndicated to, and whether that runs on HTTP or HTTPS).
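A minimal Python sketch of that check for an RSS 2.0 feed, assuming the feed XML has already been downloaded from the HTTPS version. It flags both "http://" and protocol-relative "//" links in each item's link element and in the HTML of its description; Atom feeds and content:encoded fields use other element names and would need the same treatment.

```python
import xml.etree.ElementTree as ET
from html.parser import HTMLParser


class _LinkCollector(HTMLParser):
    """Collects href and src values from an HTML fragment (the article body)."""

    def __init__(self):
        super().__init__()
        self.urls = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name in ("href", "src") and value:
                self.urls.append(value)


def insecure_feed_links(rss_xml):
    """Return http:// and protocol-relative links found in an RSS 2.0 feed."""
    root = ET.fromstring(rss_xml)
    found = []
    for item in root.iter("item"):
        link = item.findtext("link", default="").strip()
        urls = [link] if link else []
        collector = _LinkCollector()
        collector.feed(item.findtext("description", default=""))
        collector.close()
        urls.extend(collector.urls)
        found.extend(url for url in urls if url.startswith(("http://", "//")))
    return found
```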

Accelerated Mobile Pages

If the website is AMP-enabled, the link references to the AMP URLs in the source code need to be updated to the absolute HTTPS version. For example:

<link rel="amphtml" href="http://www.example.com/amp/">

Becomes

<link rel="amphtml" href="https://www.example.com/amp/">

In addition, any internal links, link references, canonicals, asset links, etc. in the source code of the AMP pages need to be updated to the relevant HTTPS version.

For more information about AMP, visit the AMP Project.

Cookies

It is also important that no cookies are sent over unencrypted connections. Allowing this can expose the data in a cookie, e.g. authentication data, in plain text to anyone able to intercept the traffic. Double check the server settings so that cookies are marked as secure. For example, with PHP, check the php.ini file for the following:

session.cookie_secure = True

With ASP.NET set the following in the web.config file:

<httpCookies requireSSL="true" />

Verify the setting by accessing a page that sets a new cookie and check the HTTP Headers for the following:

Set-Cookie: mycookie=abc; path=/secure/; Expires=Wed, 12 Dec 2018 00:00:00 GMT; secure; httpOnly;
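To check many pages, the Set-Cookie values can be inspected with a small script. A minimal Python sketch, assuming the header values have already been collected (for example with `response.msg.get_all("Set-Cookie")` on an `http.client` response, since a page may set several cookies): it reports whether both the Secure and HttpOnly attributes are present. HttpOnly is not strictly part of the HTTPS move, but it is included because the verification line above shows it.

```python
def cookie_attributes(set_cookie_value):
    """Return the lower-cased attribute names of one Set-Cookie header value."""
    parts = [part.strip() for part in set_cookie_value.split(";")]
    # parts[0] is the name=value pair; the rest are attributes like Path=/ or Secure.
    return {part.split("=", 1)[0].strip().lower() for part in parts[1:] if part}


def is_locked_down(set_cookie_value):
    """True when the cookie carries both the Secure and HttpOnly attributes."""
    attributes = cookie_attributes(set_cookie_value)
    return "secure" in attributes and "httponly" in attributes
```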

Moving to HTTPS

Now that the content is prepared and updated for the HTTPS version, it is time to move the website.

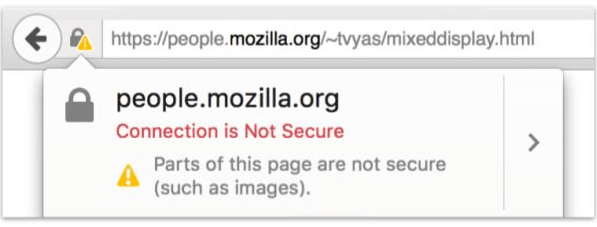

Crawl HTTPS Version

Before completing the switch from the HTTP to the HTTPS version and going live with the HTTPS version to the outside world, Googlebot included, a safety check needs to be performed.

Crawl the entire HTTPS version, and while crawling, check for the following:

- Any CSS, images, Javascript, Fonts, Flash, Video, Audio, Iframes being loaded insecurely through HTTP instead of HTTPS;

- Any Redirects to the HTTP version;

- Any Internal Links, Canonicals, Hreflang, and/or Structured Data, etc. pointing to the HTTP version;

- Any 4xx or 5xx Errors in the Server Log Files for the HTTPS version.
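A crawler will report most of these issues, but a single page can also be checked with a short script. A minimal Python sketch using only the standard library: it lists every "http://" reference in common URL attributes (src, href, srcset, poster, data, action) of an HTML page, which covers insecure assets as well as internal links and canonical or hreflang annotations. It does not see requests made from CSS url() values or by Javascript at runtime; the browser's developer tools are needed for those.

```python
from html.parser import HTMLParser

# Attributes that commonly hold URLs the browser loads or links to.
URL_ATTRIBUTES = {"src", "href", "srcset", "poster", "data", "action"}


def _candidate_urls(name, value):
    """Split srcset into its URLs; every other attribute holds a single URL."""
    if name == "srcset":
        return [candidate.split()[0] for candidate in value.split(",") if candidate.strip()]
    return [value.strip()]


class InsecureReferenceFinder(HTMLParser):
    """Collects (tag, attribute, URL) for every http:// reference in a page."""

    def __init__(self):
        super().__init__()
        self.found = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if name not in URL_ATTRIBUTES or not value:
                continue
            for url in _candidate_urls(name, value):
                if url.lower().startswith("http://"):
                    self.found.append((tag, name, url))


def insecure_references(html):
    finder = InsecureReferenceFinder()
    finder.feed(html)
    finder.close()
    return finder.found
```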

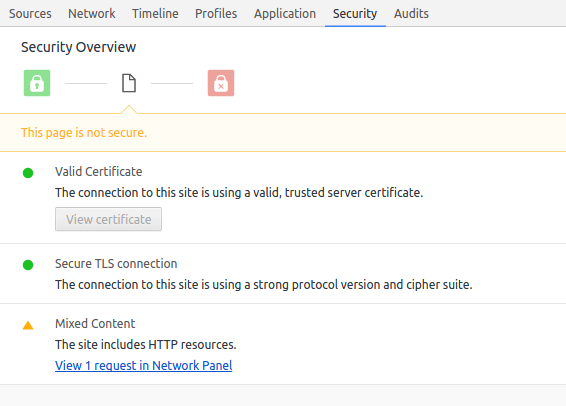

Example of a security audit in Google Chrome Developer Tools, highlighting mixed insecure content


When no errors occur, continue to the next step.

Important: Limit the crawl to the HTTPS version, do not crawl the HTTP version.

Barry Schwartz

[CEO of RustyBrick]

I believe the most common challenges when it comes to transitioning to HTTPS is the mixed content issue. Making sure that when you go HTTPS, that all the content within the page is also able to be served up securely. That includes images, videos, your comments, social sharing buttons and embeds. Then you also don’t want to lose your social share counts, so hacking the count URL to use the http URL might be something you want to consider, if the social sharing button plugin doesn’t support it correctly. But overall, I think the most frustrating part, especially for a large web site, are the mixed content errors.

Updating the XML Sitemap (Again)

Extract all the URLs from the crawl of the HTTPS version and compare this list with the URLs listed in the XML Sitemaps. Find out which URLs are live and indexable on the HTTPS version but do not have an entry in the XML Sitemaps, and add these missing indexable URLs to the XML Sitemaps.
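The comparison can be scripted once the crawl export and the sitemap are available locally. A minimal Python sketch, assuming a standard urlset sitemap (a sitemap index file would first need to be expanded into its child sitemaps) and a list of indexable HTTPS URLs from the crawl. Note that the sitemap namespace "http://www.sitemaps.org/schemas/sitemap/0.9" is an identifier and keeps its "http://" after the move.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml):
    """Return the set of <loc> URLs listed in a urlset sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text}


def missing_from_sitemap(crawled_indexable, sitemap_xml):
    """Indexable URLs from the crawl that have no entry in the sitemap."""
    return sorted(set(crawled_indexable) - sitemap_urls(sitemap_xml))
```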

Redirects

Now that all content has been moved and updated, new redirection rules need to be implemented to redirect all HTTP traffic to the relevant HTTPS versions. A simple catch-all Apache solution can be used in the .htaccess of the HTTP version:

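RewriteEngine On

# Only redirect requests that arrived over plain HTTP (avoids a loop if HTTPS shares this file)

RewriteCond %{HTTPS} off

RewriteRule ^(.*)$ https://www.example.com/$1 [R=301,L]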

This redirects every URL on the HTTP version to the same path on the HTTPS version. However, it also makes the robots.txt and XML Sitemaps on the HTTP version inaccessible, because the catch-all rule redirects requests for these files to the HTTPS version as well. To prevent this from happening, an exemption rule needs to be added. For example, in the .htaccess for Apache on the HTTP version, this may look like:

RewriteEngine On

RewriteRule ^(robots\.txt|sitemap\.xml)$ - [L]

RewriteCond %{HTTPS} off

RewriteRule ^(.*)$ https://www.example.com/$1 [R=301,L]

This will redirect every request on the HTTP version to the HTTPS version, except requests for the robots.txt and sitemap.xml files.

Move Through Canonicals

When moving content through canonicals, hold off on implementing the redirection rules until enough of the critical content is indexed and served from the HTTPS version. Once Googlebot has seen most or all of the content on HTTPS, the redirection rules can be pushed live to force Googlebot and users to the HTTPS version.

Reduce Redirect Chains

When implementing the new redirection rules, double check the old redirection rules and update these to point directly to the new HTTPS end destination. Avoid a redirect chain like: HTTP A redirects to HTTP B, which in turn redirects to HTTPS C.

Also keep in mind that some systems may add or remove trailing slashes by redirecting them to the other variation on the same protocol, resulting in additional redirects. For example:

http://www.example.com/dir redirects to http://www.example.com/dir/, which redirects to https://www.example.com/dir, which redirects to the final destination https://www.example.com/dir/.

It is more efficient to redirect each of the following URLs:

http://www.example.com/dir

http://www.example.com/dir/

https://www.example.com/dir

directly to:

https://www.example.com/dir/
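To find chains like the one described above in an existing set of rules, the redirects can be exported as a source-to-target list and followed to the end. A minimal Python sketch, assuming such an export is available as a dictionary with one hop per entry; any source with more than one hop is a candidate for a rule that points straight at the final HTTPS destination.

```python
def flatten_redirects(redirects, max_hops=10):
    """Map every source URL to (final destination, number of hops).

    redirects: dict of source URL -> target URL, one hop per entry.
    Raises ValueError on redirect loops or chains longer than max_hops.
    """
    flattened = {}
    for source in redirects:
        url, hops, seen = source, 0, {source}
        while url in redirects:
            url = redirects[url]
            hops += 1
            if url in seen or hops > max_hops:
                raise ValueError(f"redirect loop or overly long chain starting at {source}")
            seen.add(url)
        flattened[source] = (url, hops)
    return flattened
```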

When creating the new redirection rules for the HTTP version, check whether it is beneficial to make the trailing slash optional in the regular expression, so that both variants are redirected in a single hop. For example:

RewriteEngine On

RewriteRule ^(dir[\/]?)$ https://www.example.com/dir/ [R=301,L]

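If needed, use a redirect testing tool to test the new redirection rules. Keep in mind that on the HTTPS version only the variant without the trailing slash should be redirected; otherwise the rule would also match https://www.example.com/dir/ and redirect it to itself in an endless loop.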

Naked domain vs WWW

While writing the new redirection rules, choose a primary hostname and set up redirection rules from the non-primary to the primary version on HTTPS. For example, when the WWW hostname is the primary HTTPS version, also redirect all naked domain URLs on the HTTPS version to the primary WWW version on HTTPS:

RewriteEngine On

RewriteCond %{HTTP_HOST} ^example\.com$ [NC]

RewriteRule ^(.*)$ https://www.example.com/$1 [R=301,L]

Once all new redirection rules to the HTTPS version are live, continue to the next step.
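Before translating the rules into .htaccess, it can help to write down the intended single-hop outcome and test it against the URL lists gathered earlier. A Python sketch, assuming https://www.example.com is the primary version and that `DIRECTORIES` is a hypothetical list of paths that should end in a trailing slash; it models the intended result of the rules above, not Apache's own rule processing.

```python
import re
from urllib.parse import urlsplit

PRIMARY = "https://www.example.com"  # primary protocol and hostname
# robots.txt and sitemap.xml stay reachable on the HTTP version.
EXEMPT_ON_HTTP = re.compile(r"^/(robots\.txt|sitemap\.xml)$")
DIRECTORIES = {"/dir"}  # hypothetical paths that must end in a trailing slash


def redirect_target(url):
    """Return the single-hop 301 target for url, or None if it is served as is."""
    parts = urlsplit(url)
    path = parts.path or "/"
    if parts.scheme == "http" and EXEMPT_ON_HTTP.match(path):
        return None
    if path in DIRECTORIES:
        path += "/"  # add the trailing slash in the same hop
    target = PRIMARY + path + (f"?{parts.query}" if parts.query else "")
    return None if target == url else target
```

Every HTTP, naked-domain or slashless URL maps to its final destination in one hop, robots.txt and sitemap.xml on HTTP are served as they are, and URLs that are already final return None.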

Crawl HTTP Version (Again)

This time, gather the URLs extracted earlier from the Server Log Files, the XML Sitemaps, and the crawl of the HTTP version.

Utilize a crawler such as Screaming Frog SEO Spider to crawl every URL and verify that all the redirections work as intended, and that every URL on the HTTP version redirects to the correct HTTPS version.
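For spot checks of individual URLs outside a crawler, a few lines of Python are enough. A minimal sketch, assuming the expected HTTPS target of each HTTP URL is known (for example, the same path on https://www.example.com): `fetch_redirect` requests the URL without following redirects and `check_redirect` compares the result with the expectation. Some servers send a relative Location header, which this sketch would report as a mismatch.

```python
import http.client
from urllib.parse import urlsplit


def fetch_redirect(url, timeout=10):
    """Request url without following redirects; return (status, Location header)."""
    parts = urlsplit(url)
    connection_class = (http.client.HTTPSConnection if parts.scheme == "https"
                        else http.client.HTTPConnection)
    connection = connection_class(parts.netloc, timeout=timeout)
    target = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
    try:
        connection.request("GET", target)
        response = connection.getresponse()
        return response.status, response.getheader("Location")
    finally:
        connection.close()


def check_redirect(url, status, location, expected):
    """Return a problem description, or None if url 301s straight to expected."""
    if status != 301:
        return f"{url}: expected 301, got {status}"
    if location != expected:
        return f"{url}: redirects to {location}, expected {expected}"
    return None
```

For example, `check_redirect(url, *fetch_redirect(url), expected)` returns None when the URL passes.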

When all is working as intended, continue to the next step.

Replace robots.txt

At this stage, the robots.txt on the HTTPS version needs to be updated.

Copy the robots.txt file from the HTTP version to the HTTPS version and update the Sitemap reference to the new Sitemap file. For example:

User-Agent: *
Disallow:

Sitemap: https://www.example.com/sitemap.xml
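Python's standard library can serve as a quick sanity check of the new file. A minimal sketch using `urllib.robotparser` (Python 3.8 or later for `site_maps()`), assuming the robots.txt content shown above: it confirms that the Sitemap line points to HTTPS and that the empty Disallow line leaves the site crawlable. User-Agent and Disallow are kept on consecutive lines, because some parsers, Python's included, treat a blank line after a User-Agent line as the end of that group.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """User-Agent: *
Disallow:

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Every Sitemap line should point at the HTTPS version.
sitemaps = parser.site_maps() or []
print("sitemaps:", sitemaps)
print("all on https:", all(url.startswith("https://") for url in sitemaps))
# An empty Disallow line allows everything for all user agents.
print("homepage crawlable:", parser.can_fetch("Googlebot", "https://www.example.com/"))
```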

Configuring Google Search Console

Now that the content has been moved to the HTTPS version, the redirections on the HTTP version are in place and the XML Sitemaps and robots.txt have been updated, it is time to go to Google Search Console and let Google know about the update.

Adding Sites Variations

A minimum of five variations of the domain name need to be present in Google Search Console. These are as follows:

http://example.com

http://www.example.com

https://example.com

https://www.example.com

example.com

The last one is the Domain property, which requires DNS verification. Once it is verified, the other four (URL-prefix) properties are quick and simple to add.

Verify and add the ones that are currently not present in Google Search Console.

When the website has any subdomains in use, or any subdirectories separately added to Google Search Console, then these also need to be added for both the HTTP and HTTPS versions. For example:

http://m.example.com

https://m.example.com

Create Set

Since May 2016, Google Search Console has been supporting grouping data of one or more properties as a set. This is extremely useful for the move to HTTPS. So add a set with every relevant HTTP and HTTPS property in the Google Search Console. For example, add the following properties to one set:

http://example.com/

https://example.com/

http://www.example.com/

https://www.example.com/

When using subdomains and/or subdirectories for specific geographic targeting, add additional sets for each geographic target with every relevant HTTP and HTTPS version. For example, add the following sets:

Set 1:

http://www.example.com/nl/

https://www.example.com/nl/

Set 2:

http://de.example.com/

https://de.example.com/

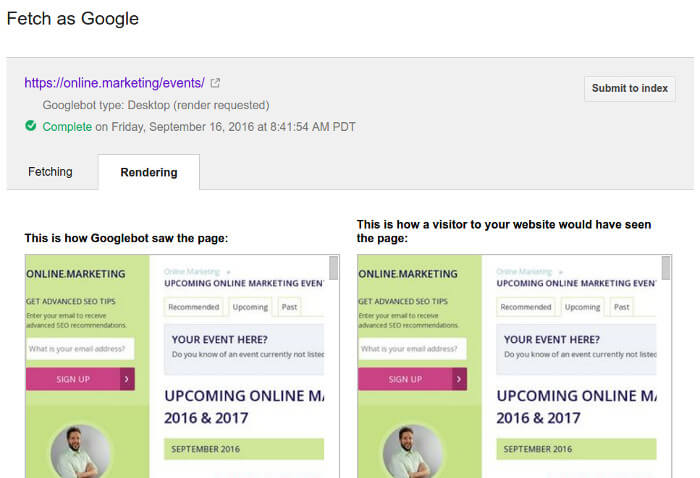

Test Fetch and Render

To make sure everything works as intended for Googlebot, use the Fetch and Render tool in Google Search Console to fetch and render:

- Go to the homepage of the HTTP version and verify it redirects properly. If everything checks out, click the "Submit to Index" button;

- On the homepage of the HTTPS version, verify that it renders correctly. If everything checks out, click the "Submit to Index" button and select the "Crawl this URL and its direct links" option when prompted.

Example of the Fetch and Render tool in Google Search Console


Note: The submission to the index also notifies Googlebot of the HTTPS version and requests that Googlebot start crawling it.

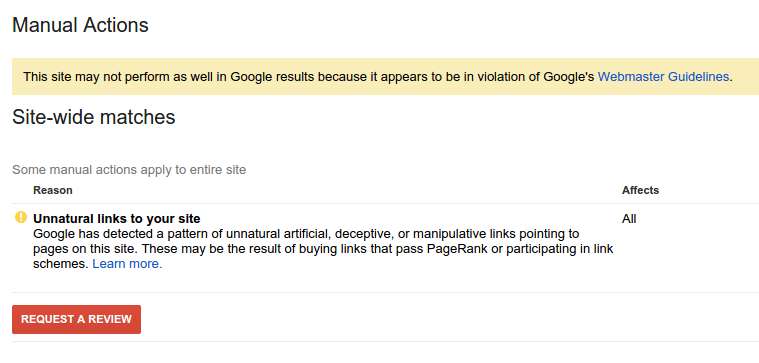

Verify Manual Actions

Before anything else, double check there are no manual actions holding back the migration of the website by going to the Manual Actions overview in Google Search Console for the old primary HTTP version.

Example of a Manual Action notification in Google Search Console


If there are any manual actions present, hire some trusted Google Penalty Consultants to help address these Google penalties as soon as possible. While this process is started, continue to the next step.

Preferred Domain Settings

To make sure the preferred domain is set correctly, go to the primary HTTPS property in Google Search Console and click on the gear icon (upper right) and click on Site Settings. Verify that the preferred domain is set to the primary version.

If not set, update the setting with the primary version.

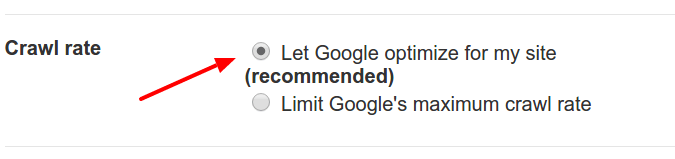

Crawl Rate

Most Search Engine Optimizers do not change this setting in Google Search Console, but if anyone with access has changed the Crawl Rate in Google Search Console in the past for the HTTP property, then this may also need to be updated in the HTTPS property.

Check the old primary HTTP property in Google Search Console by clicking on the gear icon (upper right) and then on Site Settings. If the Crawl Rate does not say "Let Google optimize for my site", note the current setting, go to the primary HTTPS property, open Site Settings there via the gear icon, and change the Crawl Rate to match the HTTP property.

Example of Crawl Rate settings in Google Search Console


When unsure if anything needs to be changed, check with the IT team of the organization. Keep in mind, ideally from an SEO perspective, this setting is not to be changed unless absolutely necessary.

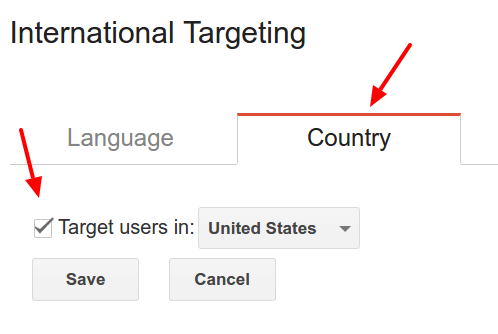

Geotargeting

If the website does not use a country code top-level domain (ccTLD), chances are that the international targeting in Google Search Console has been set manually. Check in the old primary HTTP property whether any international targeting is set and changeable with a pull-down menu, and if so, go to the primary HTTPS property and set the international targeting to the same country.

Example of International Country Targeting settings in Google Search Console


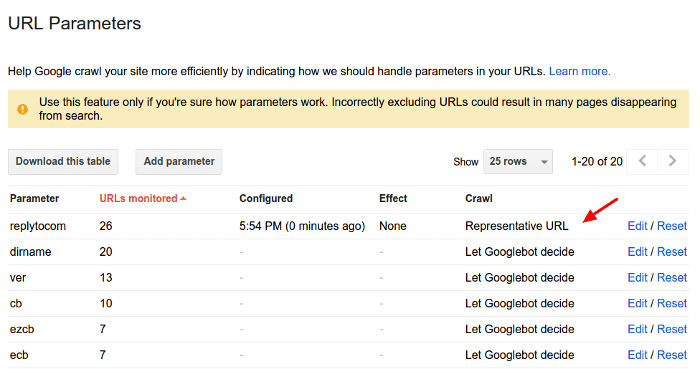

URL Parameters

Go to the URL Parameters tool in the old primary HTTP property and check whether any URL parameters are listed. If so, download the table of URL Parameters and their settings.

Example of URL Parameters settings in Google Search Console


Now go to the URL Parameters tool in the primary HTTPS property and add and categorize, one by one, the URL Parameters downloaded from the old primary HTTP property. Completing this step may help Googlebot focus its limited crawl budget and prioritize the important URLs when crawling the new HTTPS version of the website.

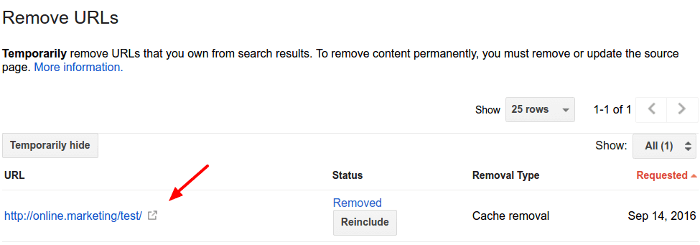

Removed URLs

To prevent sensitive URLs of the HTTP version from being reindexed and served as search results in Google Search, go to the URL Removal tool in Google Search Console for the old primary HTTP version and check whether any URLs have been submitted for temporary removal. If any are present, write these down, go to the URL Removal tool for the primary HTTPS version, and add each pattern one by one.

Example of the URL Removal tool in Google Search Console


Note: The effect of the URL Removal tool is only temporary. If certain URLs need to be permanently removed from the Google Index, remove them from the HTTPS version and return a 404, or add a meta noindex to them, so they are not indexed when Googlebot crawls the new HTTPS version.

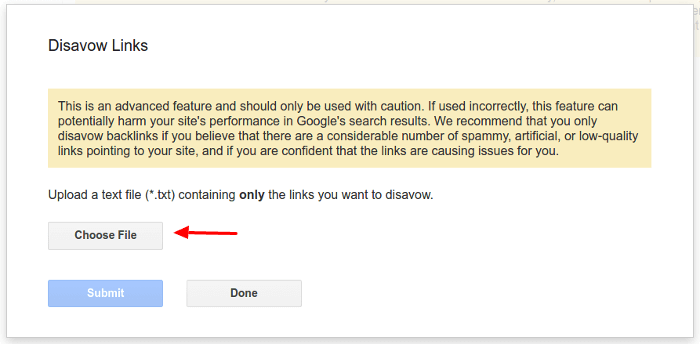

Disavow file

To prevent any backlink issues (such as a Google Penguin demotion or a manual action) for the new HTTPS version, go to the Disavow tool in Google Search Console for the old primary HTTP version and check whether a disavow file is present. If so, download it and rename it from .CSV to .TXT. Next, go to the Disavow tool for the primary HTTPS version and upload the renamed TXT file.

Example of the Disavow tool in Google Search Console


If no disavow file is present, or if the file has not been updated in a while, prevent the website from being held back in Google rankings by a risky backlink profile, and hire Google SEO Consultants to assist with a full manual backlink analysis and a new disavow file.

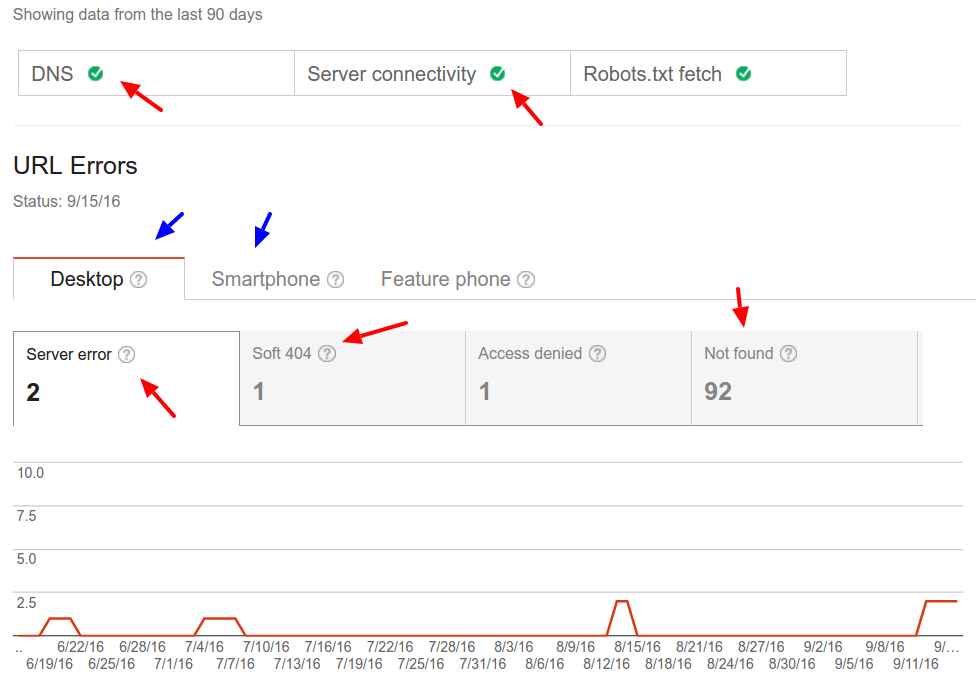

Crawl Errors

To avoid any trust issues with server response codes, have a look at the Crawl Errors overview in Google Search Console for the old primary HTTP version. In particular, check whether Google reports any Soft 404s. If so, fix these by returning a 404 status code for those URLs. As Googlebot does not favour Soft 404s, it is important not to carry any Soft 404 issues over from the HTTP version to the primary HTTPS version.

Example of error reports in Google Search Console


Submit XML Sitemaps

Earlier in this guide, a number of XML Sitemaps have been created, updated, and placed in the roots of the relevant subdomains and/or subdirectories and/or the primary hostname, for both the HTTP and the HTTPS version. These XML Sitemaps contain only indexable and crawlable URL (preferably canonicals), extracted from the old XML Sitemaps, the Server Log Files, and from crawling the HTTP and HTTPS versions of the website. For example:

http://www.example.com/sitemap.xml

http://de.example.com/sitemap.xml

http://www.example.com/nl/sitemap.xml

And

https://www.example.com/sitemap.xml

https://de.example.com/sitemap.xml

https://www.example.com/nl/sitemap.xml

Test and submit each XML Sitemap to the relevant Google Search Console property, based on protocol and/or subdirectory and/or subdomain.

Sam Hurley

[Managing Director @ OPTIM-EYEZ]

When making the transition to HTTPS, it can actually cause more harm than good if care isn’t taken to ensure smooth deployment.

It’s not unusual to experience some ranking fluctuations throughout the process – but there are steps you can take to minimise any negative impacts.

After all, moving to HTTPS is a cool upgrade that portrays trust and future-proofs your website.

That said, here are the most common issues I’ve witnessed after the switch:

Not forcing HTTPS

If you don’t set up a redirect from the HTTP version of your domain, you will end up with TWO versions of the same website. That means TWO websites indexed in Google and confusion for the search engine caused by duplicated content - not a good impression for visitors and potential customers either.

Some choose to keep both versions live and add canonical tags, though I really don’t recommend doing so - keep it simple.

Confusing your third-level domains

For example, ‘www’ is a third-level domain. It is possible for all the following versions of your site to be live and indexable at the same time:

https://www.domain.com

https://domain.com

http://domain.com

http://www.domain.com

Again, not good for search, backlinks or usability.

Not updating internal links

After making the switch, every internal link should point directly to the HTTPS version. You should not have redirects taking place on navigational links when the power is in your hands to amend. That’s just lazy and adds bumps in the road for the effective dissemination of on-site ‘link juice’; it’s basic housekeeping.

Not adding your HTTPS site to Google Search Console (formerly Webmaster Tools)

This is a standard addition – don’t forget it! You should have both the HTTP and HTTPS versions of your site added into Search Console for diagnostics.

Not ensuring your certificate is site-wide and applied site-wide

Basic stuff but you’d be surprised how many times I’ve seen site owners believing they own a site-wide SSL/TLS certificate yet it is only applied on their checkout section!

Not setting your SSL certificate to auto-renew

Yes! If your certificate expires, your checkout pages will not be secured and visitors will be greeted with horrible messages telling them so. Oh dear...

Not ensuring all page elements are secure

Even when your SSL/TLS certificate is valid...if you haven’t correctly set images and every other page element to redirect to HTTPS, visitors will see the same message above.

Slower load times

An SSL/TLS cert can occasionally impact site speed to a noticeable degree. If this happens to you, be sure to collectively speak with your host, certificate provider and also your web developer to see what can be done (there will usually be a workaround).

It’s always best to hire a techy to lock down your website – there are many facets to the process and a lot that can go wrong without due care. Ultimately, these issues will affect trust and conversions over time.

Finalizing move to HTTPS

At this point, everything has been done for Google to prefer the HTTPS version of the website and for Googlebot to best understand the move to HTTPS. Depending on the size of the website and the crawl budget available, it may take anywhere from a few weeks to a few months for the switch to be mostly visible in Google Search.

In addition, it is also important to monitor how the move to HTTPS progresses in Google Search, and whether any new issues arise.

Monitor Server Logs

To spot anything that is not working as intended and fix it as soon as possible, make sure there is a notification system in place that monitors for any 5xx or 404 responses on either the HTTP or the HTTPS version of the website. A good place to find these errors is in the Server Log Files; however, it is also possible to send a message by email or to a Slack channel the moment they occur. Working together with the IT team ensures there is manpower available to resolve any critical errors as soon as possible. Highlighted 404 errors may be a false alarm, but make sure they are not unexpected, and check why they occur and whether they need fixing.

84.25.65.243 - - [10/Oct/2016:13:55:36 -0700] "GET /favicon.ico HTTP/1.1" 200 326 "http://www.example.com/start.html" "Mozilla/4.08 [en] (Win98; I ;Nav)"

2.5.45.7 - - [10/Oct/2016:13:55:45 -0700] "GET / HTTP/2" 200 2956 "-" "Mozilla/5.0 (iPad; U; CPU OS 3_2_1 like Mac OS X; en-us) AppleWebKit/531.21.10 (KHTML, like Gecko) Mobile/7B405"

64.233.191.102 - - [10/Oct/2016:13:55:49 -0700] "GET /home HTTP/1.1" 200 43455 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
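For a quick look without a full log analysis stack, the Server Log Files can be summarized with a short script. A minimal Python sketch, assuming the Apache/Nginx "combined" log format shown above: it counts status codes and lists every 404 and 5xx request, marking those whose user agent claims to be Googlebot. The user agent string can be spoofed, so Google recommends confirming real Googlebot traffic with a reverse DNS lookup.

```python
import re
from collections import Counter

# Apache/Nginx "combined" log format.
COMBINED = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\S+) "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def summarize(lines):
    """Return (status code counts, [(status, path, claims_googlebot)] for 404/5xx)."""
    statuses, problems = Counter(), []
    for line in lines:
        match = COMBINED.match(line)
        if not match:
            continue  # skip lines in another format
        status = int(match["status"])
        statuses[status] += 1
        if status == 404 or status >= 500:
            problems.append((status, match["path"], "Googlebot" in match["agent"]))
    return statuses, problems
```

Applied to the three sample lines above, every request returns 200, so the list of problems stays empty.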

When possible, also use the system to keep track of which pages Googlebot is crawling, how fast, and which status codes it primarily receives. As this data is best mined from the Server Log Files, it may be useful to use an ELK stack on a local server or a third-party log analyzer, such as Botify, Screaming Frog Log Analyser or Splunk. Alternatively, load the Server Log Files into Google BigQuery and use the Google BigQuery interface or Data Studio 360 to analyze the data.

Example of Screaming Frog Log Analyser with data


Monitor Google Search Console

To monitor the indexation numbers of the HTTP and HTTPS versions, keep an eye on all submitted XML Sitemaps. Combined with the Google Index Status, as reported in Google Search Console, this indicates how many of the URLs of the HTTP version are being de-indexed and replaced by their HTTPS versions.

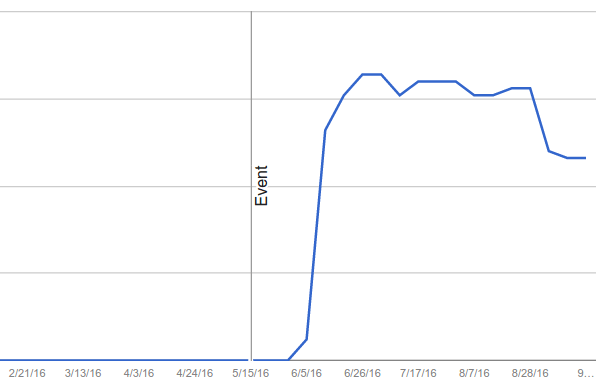

Example of the Index Status of a new HTTPS website in Google Search Console


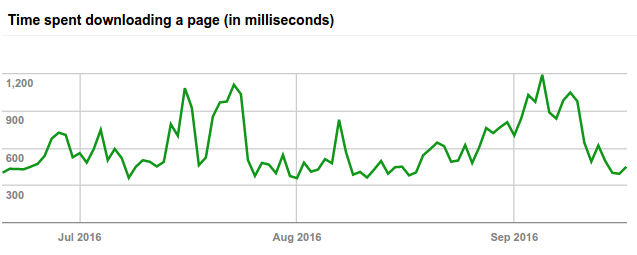

Also monitor the Crawl Stats overview of the relevant HTTP and HTTPS versions for crawl speed and make sure the infrastructure responds fast to Googlebot. If needed, and when possible, consider adding additional and more powerful server instances with faster network connections to the infrastructure for the next few months while Googlebot re-crawls both the HTTP and the HTTPS version of the website. If this has any effect on getting the website crawled faster, it will only be visible in the Crawl Stats and Server Log Files.

Example of how fast Googlebot downloads a page


Monitor the AMP, Rich Cards, and Structured Data overviews of the HTTPS version in Google Search Console to see whether any indexation issues appear, such as errors, and how quickly the data is picked up. If issues appear, fix them as soon as possible.

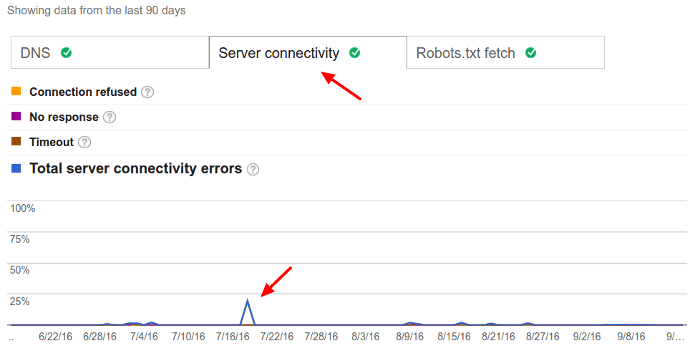

Monitor the Crawl Errors overview of the relevant HTTP and HTTPS versions in Google Search Console to see if there are any issues with Server Errors (5xx), Not Found (404), Connection Issues and Soft 404s for desktop, feature phones and/or smartphones. If any Server Errors, Connection Issues or Soft 404s appear, especially on the HTTPS version, fix these as soon as possible. Highlighted Not Found errors may be a false alarm, but make sure these are not unexpected, and check why they are occurring and whether they need fixing.

Example of connection issues reported in Google Search Console


Last but not least, monitor Search Analytics in Google Search Console. Earlier in this guide, different sets were created to group the different properties, including one set with all the HTTP and HTTPS properties relevant to this move. Now it is time to reap the benefits of doing this.

By combining all the relevant HTTP and HTTPS properties, it is possible to track in Google Search Analytics the overall impact of the move to HTTPS on Google rankings, according to Google's own data.

The thing is that when content is moved from HTTP to HTTPS, the Search Analytics data of each Google Search Console property is only recorded and shared for that particular property. As a result, the HTTP property appears to lose all traffic and rankings while the HTTPS property gains them. However, it may take more than 90 days to get a good overview of the impact, and Search Analytics in Google Search Console only covers the last 90 days.

The combined set will provide an overview of the total rankings and total impact of the move to HTTPS, which is also available for download, using the Search Analytics API and/or a download button in the Search Analytics overview page.
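The same combined view can be reproduced from the per-property exports. A minimal Python sketch, assuming each export has been reduced to (page URL, clicks, impressions) rows; this row format is an assumption for illustration, not the Search Analytics API response format. It drops the protocol from each page URL, so the HTTP and HTTPS figures for the same page add up.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def combine_properties(rows):
    """Sum clicks and impressions per host + path across HTTP and HTTPS rows.

    rows: iterable of (page URL, clicks, impressions) tuples.
    """
    totals = defaultdict(lambda: [0, 0])
    for page, clicks, impressions in rows:
        parts = urlsplit(page)
        key = parts.netloc.lower() + (parts.path or "/")  # protocol dropped
        totals[key][0] += clicks
        totals[key][1] += impressions
    return {key: tuple(value) for key, value in totals.items()}
```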

Update Incoming Links

Now that the website is fully migrated to HTTPS, the rest of the world needs to be told. If the website is part of a larger network of websites belonging to the same organization, then go to each website in the (internal) network and update all references of the website to the HTTPS version. This may require talking to different teams in the organization and/or searching within the databases to find and update all link references to the HTTPS version.

Often, links to the website are also present in email signatures of every employee, the official social profiles page of the website and/or organization (e.g. Twitter, Facebook, Pinterest, etc.), and the company profile pages on LinkedIn and/or Yellow Pages and/or Wikipedia and local business directories, PPC campaigns and ads, social media ad campaigns, newsletter software and/or mailing lists, direct marketing ad copy, videos, Analytics software, third party ranking tracking and reporting software, Google My Business listings, business cards, third party review platforms, etc. Not all of these need to be updated straight away, but do change what can be as soon as possible, and be sure to schedule the rest to make sure the changes are made in the near future.

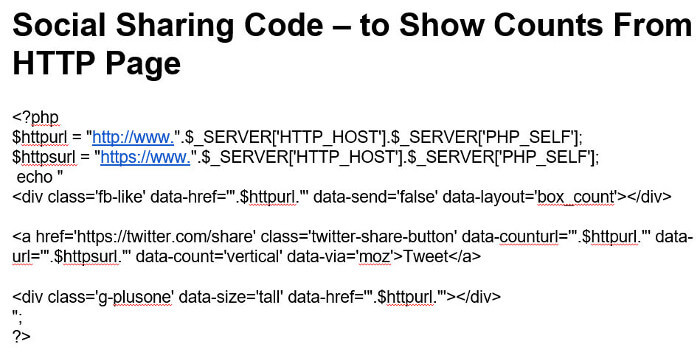

If social counts need to be preserved, check out this article by Michael King, or the advice from Eric Enge below.

Eric Enge

[Founder and CEO Stone Temple Consulting]

The two challenges we see most often are:

- Third party code / images / widgets /… which are not https. So what happens is they end up throwing off errors when those pages are accessed. Pretty much every site that goes through a conversion runs into some of these issues.

- Social share counts get broken. This happens because the social sites don’t treat the URL: http://example.com/contentpiece137 as the same URL as https://example.com/contentpiece137. If you have a piece of content with 1,000 shares, when you change to HTTPS, the social widget will show 0 shares for that content as the https version of that page will show 0 shares. This can be disconcerting!

However, fixing it is quite doable. You can implement some code that will pull in the social share count for the http page to show on your https page.

You should put this code ONLY on the pages that were published before they were switched over to https. You’ll want to use your standard social widget code on pages published after the switch (as the http version of their URL will have no shares on them).

Last but not least, go to the Links to Your Site overview in Google Search Console and find the most important links to the website. These may be from important business partners, news coverage or domains that link often to the website. Reach out to these websites and inform them that the website has moved to HTTPS. This may also be a great opportunity to communicate what your team can do for them and to see whether a business collaboration is possible.

Content-Security-Policy

To avoid any mixed content issues, be sure to add a Content Security Policy to the website. This policy restricts which resources may be loaded and helps mitigate XSS attacks. However, this guide focuses only on the upgrade-insecure-requests directive.

The upgrade-insecure-requests directive tells the browser to request over HTTPS every resource that the page references over HTTP (images, scripts, stylesheets, etc., including third-party ones), as well as links to pages on the website's own origin. For example, the following link references in the source code:

<a href="http://www.example.com/">example</a>

<img src="http://www.example.com/image1.png" />

Are automatically upgraded by the browser to:

<a href="https://www.example.com/">example</a>

<img src="https://www.example.com/image1.png" />

To enable this with .htaccess in Apache, just add the following HTTP header:

Header set Content-Security-Policy "upgrade-insecure-requests"

Alternatively, use the following code in the HTML source of the HTTPS version:

<meta http-equiv="Content-Security-Policy" content="upgrade-insecure-requests" />

This forces these requests to HTTPS. There is no fallback to HTTP, so any resource that is not available on HTTPS will fail to load and may break things on the website. Be sure to test that the website still looks and works correctly.
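To predict which requests the directive will rewrite (and therefore which resources must really exist on HTTPS), its behaviour can be approximated in a few lines. A simplified Python sketch: it compares hostnames rather than full origins for navigations and ignores every other CSP directive. Subresources are upgraded whatever their host, links only when they stay on the page's own host, and an explicit port 80 becomes 443.

```python
from urllib.parse import urlsplit


def upgrade_insecure(url, page_host, is_navigation):
    """Approximate how upgrade-insecure-requests rewrites one URL on a page.

    page_host is the lower-case hostname of the page, e.g. "www.example.com".
    """
    parts = urlsplit(url)
    if parts.scheme != "http":
        return url  # already HTTPS or relative: left alone
    if is_navigation and parts.hostname != page_host:
        return url  # links to other hosts are not upgraded
    netloc = parts.netloc
    if parts.port == 80:
        netloc = netloc.rsplit(":", 1)[0] + ":443"  # explicit port 80 becomes 443
    return parts._replace(scheme="https", netloc=netloc).geturl()
```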

HSTS

HSTS, which stands for HTTP Strict Transport Security, protects users against protocol downgrade attacks and is used to prevent redundant redirections in browsers for websites that operate solely on HTTPS.

To explain this in other words, ask yourself (or your users): "How often does anyone type the protocol into the address bar of a browser when typing a domain name?"

Adding the 'S' to HTTP


Most likely the answer is: "Almost never." The problem is that browsers default to HTTP when a domain name is typed in without a protocol. The browser first requests the HTTP version, e.g. http://www.example.com/, and, assuming the redirection rules are set up correctly, the server responds with a 301 redirect to the new location, e.g. https://www.example.com/. The browser then has to start the entire process over and send a second request to the HTTPS version before any content can be downloaded and presented to the user.

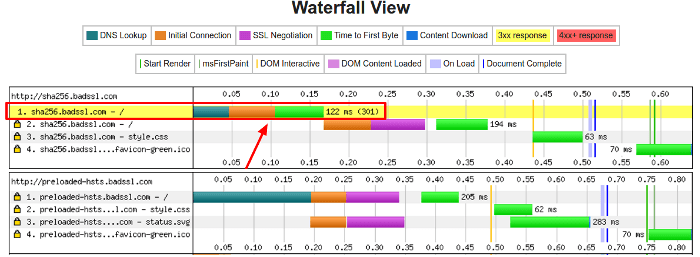

Waterfalls for websites not HSTS preloaded and HSTS preloaded


Hence, HSTS came to life. A website that operates solely and entirely on HTTPS can send the HSTS header, which tells the browser to use HTTPS for every future request to that domain, and it can also submit its domain name to be preloaded into browsers. The preload list is a hard-coded list of domain names that operate exclusively on HTTPS, maintained by the browser teams and shipped with the browser itself. If the domain name typed into the address bar is on this list, the browser does not contact the HTTP version at all; instead, it redirects the user straight to the HTTPS version using a 307 (Internal Redirect). This acts like a 301 redirect, except that it is generated by the browser instead of the server, so the HTTPS version loads a lot faster (a few milliseconds instead of 100+ milliseconds) and redundant HTTP redirects are avoided.
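The decision the browser makes can be sketched as a small Python model. This is a simplified illustration, not how any browser is actually implemented: `PRELOAD_LIST` is a hypothetical stand-in for the list shipped with the browser (the same logic applies to domains the browser remembers from an earlier Strict-Transport-Security header), the fallback branch assumes the server redirects HTTP to HTTPS with a 301, and explicit ports are not handled.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical preload entries: domain -> whether includeSubDomains applies.
PRELOAD_LIST = {"example.com": True}


def hsts_applies(host: str, preload: dict[str, bool]) -> bool:
    """True if the host, or a parent domain listed with includeSubDomains, is preloaded."""
    host = host.lower().rstrip(".")
    if host in preload:
        return True
    labels = host.split(".")
    for i in range(1, len(labels)):
        parent = ".".join(labels[i:])
        if preload.get(parent, False):
            return True
    return False


def navigate(url: str, preload: dict[str, bool]) -> list[str]:
    """Return the steps taken before the page itself is requested over HTTPS."""
    parts = urlsplit(url)
    if parts.scheme != "http":
        return [f"GET {url}"]
    https_url = urlunsplit(("https",) + tuple(parts[1:]))
    if hsts_applies(parts.hostname or "", preload):
        # The browser rewrites the URL itself; nothing is sent to the HTTP server.
        return [f"307 Internal Redirect -> {https_url}", f"GET {https_url}"]
    # Without HSTS, an extra round trip to the HTTP server is needed.
    return [f"GET {url}", f"301 Moved Permanently -> {https_url}", f"GET {https_url}"]


print(navigate("http://www.example.com/", PRELOAD_LIST))
print(navigate("http://www.example.org/", PRELOAD_LIST))
```

The preloaded domain skips the network request to the HTTP version entirely, which is where the time saving in the waterfall comes from.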

Submit for preloading

To qualify for HSTS preloading in browsers, the website needs to adhere to the following conditions:

- Use a valid SSL certificate;

- Redirect all HTTP requests to the HTTPS version;

- All hostnames, including all subdomains (such as www), need to serve content over HTTPS;

- The naked domain needs to serve the HSTS header over HTTPS, even when it redirects (for example, to the www version).

Here is an example of a common, valid HSTS header:

Strict-Transport-Security: max-age=63072000; includeSubDomains; preload
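The preload submission site, hstspreload.org, requires a max-age of at least 31536000 seconds (one year) plus the includeSubDomains and preload directives. A minimal sketch of a checker for those header requirements follows; the function names are hypothetical, and it checks only the header value, not the redirect and subdomain conditions listed above.

```python
from typing import Optional

ONE_YEAR = 31536000  # minimum max-age for preloading, in seconds


def parse_hsts(value: str) -> dict[str, Optional[str]]:
    """Split a Strict-Transport-Security value into lower-cased directives."""
    directives: dict[str, Optional[str]] = {}
    for part in value.split(";"):
        part = part.strip()
        if not part:
            continue
        name, _, arg = part.partition("=")
        # Valueless directives (includeSubDomains, preload) map to None.
        directives[name.strip().lower()] = arg.strip().strip('"') if arg else None
    return directives


def preload_problems(value: str) -> list[str]:
    """Return the reasons a header value would not qualify for preloading."""
    d = parse_hsts(value)
    problems = []
    max_age = d.get("max-age")
    if max_age is None or not max_age.isdigit():
        problems.append("max-age is missing or not a number")
    elif int(max_age) < ONE_YEAR:
        problems.append(f"max-age {max_age} is below {ONE_YEAR} seconds")
    if "includesubdomains" not in d:
        problems.append("includeSubDomains is missing")
    if "preload" not in d:
        problems.append("preload is missing")
    return problems


print(preload_problems("max-age=63072000; includeSubDomains; preload"))
```

For the example header above, the list of problems is empty.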

It is considered best practice to serve the HSTS header with every response on the HTTPS version. With Apache, this can be done in the .htaccess file of the HTTPS root directory (mod_headers must be enabled):

<IfModule mod_headers.c>
    # "always" also adds the header to non-2xx responses, such as redirects
    Header always set Strict-Transport-Security "max-age=63072000; includeSubDomains; preload"
</IfModule>

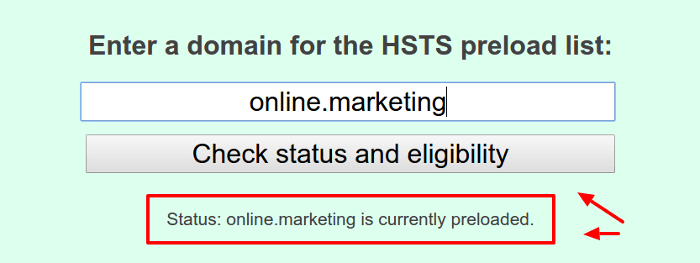

Once all conditions are met, test and submit the domain name for HSTS preloading at hstspreload.org.

Testing and submitting for HSTS preloading


Caveat

There is one major caveat to be aware of before submitting the domain name for HSTS preloading: it is hard to go back. Once submitted and approved, the domain name is hard-coded into new and future browser releases.

If the domain name is later removed at the site owner's request, it can take months before browsers have processed the removal, and years before most users have upgraded to a browser version without it (consider how long IE6 was still used after Microsoft stopped supporting it). During this time, browsers that still carry the domain name in their preload list will refuse to load any URL on the insecure HTTP version and will upgrade every request to the HTTPS version instead.

Make an informed decision, as this is a long-term commitment with no easy way out. However, given the page speed advantages, it is most often worth it. Start with a short max-age and monitor whether it affects your users. Gradually increase the max-age to the value recommended above, and once all looks good, go ahead and submit the domain name for HSTS preloading.
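One way to plan the gradual increase is a fixed schedule of max-age values. The stages below are an assumption, loosely modelled on the ramp-up suggested on hstspreload.org (5 minutes, 1 week, 1 month, then 2 years); adjust the durations to how quickly problems would surface for your users. The preload directive is only added at the final stage, and the helper name is hypothetical.

```python
# Hypothetical ramp-up schedule for the HSTS max-age, in seconds.
RAMP_UP_SECONDS = [
    300,        # 5 minutes
    604800,     # 1 week
    2592000,    # 30 days
    63072000,   # 2 years, the value used in the example header above
]


def hsts_header_for_stage(stage: int) -> str:
    """Return the Strict-Transport-Security value to serve at a given stage."""
    value = f"max-age={RAMP_UP_SECONDS[stage]}; includeSubDomains"
    if stage == len(RAMP_UP_SECONDS) - 1:
        # Only opt in to preloading once the final max-age has been monitored.
        value += "; preload"
    return value


for stage in range(len(RAMP_UP_SECONDS)):
    print(f"Strict-Transport-Security: {hsts_header_for_stage(stage)}")
```

Move to the next stage only after the previous max-age has been live for a while without complaints.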

HTTP/2 and Resource Hints

Finally, now that the website runs smoothly on HTTPS, it can take advantage of one of the biggest benefits of HTTPS: HTTP/2. HTTP/2 is the successor to the older HTTP/1.1 protocol and improves a number of things, including speed. Most modern browsers support HTTP/2, but only when the website is on HTTPS. With the website on HTTPS, the server software can be upgraded to HTTP/2, and preload resource hints can be used to tell the browser early which critical resources to fetch, so that pages load faster.
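Two small helpers illustrate this, as a sketch with hypothetical names and asset paths. `preload_link_header` builds a `Link` response header value with `rel=preload` hints, and `negotiated_protocol` uses ALPN during the TLS handshake to check whether a server offers HTTP/2 (it returns "h2" if so). The second helper needs network access.

```python
import socket
import ssl
from typing import Optional


def preload_link_header(resources: list[tuple[str, str]]) -> str:
    """Build a Link header value with rel=preload hints.

    Each resource is a (path, destination) pair, e.g. ("/css/style.css", "style").
    """
    return ", ".join(f"<{path}>; rel=preload; as={kind}" for path, kind in resources)


def negotiated_protocol(host: str, port: int = 443) -> Optional[str]:
    """Return the protocol the server selects via ALPN ("h2" means HTTP/2)."""
    context = ssl.create_default_context()
    # Offer HTTP/2 first, with HTTP/1.1 as the fallback.
    context.set_alpn_protocols(["h2", "http/1.1"])
    with socket.create_connection((host, port), timeout=5) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.selected_alpn_protocol()
```

For example, `preload_link_header([("/css/style.css", "style")])` returns `</css/style.css>; rel=preload; as=style`, which would be sent as the value of a `Link` response header.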

Garth O'Brien

[Director & Global Head of SEO @ GoDaddy]

Securing your data and your customers' data is becoming more important every day. Cyber-attacks are on the rise and increasingly affect both small websites and behemoths like Yahoo. It is imperative that site owners secure their websites by deploying an SSL certificate. Deploying an SSL certificate requires your website to transition from the HTTP protocol to the HTTPS protocol. Seems like a simple task, right? Well, there are various pitfalls that can turn this transition into a huge dumpster fire.

First, you need to determine which SSL certificate is appropriate for your site or sites. There are quite a few types: Wildcard, Extended Validation, Organization Validation and Multi-Domain SAN, to name a few. An SSL comparison chart is a good place to start in determining which certificate best suits your needs. It is also a good idea to discuss this with someone in your IT department or to contact your web hosting provider directly. Believe me, you do not want to deploy the wrong certificate.

After you have purchased and deployed the correct SSL certificate, you are not done. You must 301 redirect all of your legacy HTTP URLs to the new HTTPS URLs. This step is critical if you want to maintain your Organic Search presence. Any time you change your URLs, you must notify the search engines, just as you would notify the US Postal Service that you have moved to a new residence so that your mail arrives at your new mailbox. A 301 redirect transfers the valuable search engine authority of the legacy URLs to the new URLs. If you skip this step, you create a bunch of dead ends, such as 404 errors, because the search engines will continue to surface your legacy URLs in the search results pages.

This will directly impact your keyword rankings, which in turn will result in a steep decline in Organic Search referral traffic. To avoid this, follow these simple steps:

- Before deploying the SSL Certificate:

  - Create a list of every single URL published on your website

  - Develop your 301 redirect process and have it ready to deploy, but DO NOT DEPLOY IT YET

  - Review your HTML and XML Sitemaps:

    - If they are automated, they should update after you deploy the SSL Certificate

    - If they are manual, update these files, but do not deploy the updated files yet

- Deploy your SSL Certificate

- Test and confirm that your website URLs have transitioned from HTTP to HTTPS; check all of them

- If all of your URLs now render as HTTPS URLs, deploy your 301 redirects

- If your HTML and XML Sitemaps are automated, confirm that they have updated to show the new HTTPS URLs; if they are manual, deploy the new files you prepared before you deployed the SSL Certificate

- Ensure all internal links across your entire website use the new HTTPS URLs and not the legacy HTTP URLs.
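Some of these steps can be scripted. The sketch below, with hypothetical helper names, builds the HTTP-to-HTTPS redirect map from the URL list, flags sitemap entries that still use HTTP, and requests a legacy URL without following redirects so that you can confirm it returns a 301 pointing at the HTTPS version. It assumes the HTTPS URLs keep the same host and path; note that some servers answer HEAD requests differently from GET.

```python
import http.client
import xml.etree.ElementTree as ET
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def build_redirect_map(http_urls: list[str]) -> dict[str, str]:
    """Map every legacy HTTP URL to its HTTPS counterpart (same host and path)."""
    mapping = {}
    for url in http_urls:
        parts = urlsplit(url)
        if parts.scheme == "http":
            mapping[url] = urlunsplit(("https",) + tuple(parts[1:]))
    return mapping


def non_https_sitemap_urls(sitemap_xml: str) -> list[str]:
    """Return <loc> entries in an XML sitemap that do not use HTTPS."""
    root = ET.fromstring(sitemap_xml)
    locs = [loc.text.strip() for loc in root.findall(".//sm:loc", SITEMAP_NS) if loc.text]
    return [u for u in locs if not u.startswith("https://")]


def redirect_target(http_url: str) -> tuple[int, Optional[str]]:
    """Request an HTTP URL without following redirects; expect (301, HTTPS URL)."""
    parts = urlsplit(http_url)
    conn = http.client.HTTPConnection(parts.netloc, timeout=10)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        conn.request("HEAD", path)
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()
```

Comparing each `redirect_target(url)` result with the corresponding entry from `build_redirect_map` gives a quick check that every legacy URL is covered.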

If you follow the steps above, there should be no complications in transitioning from HTTP to HTTPS URLs. However, you should review your Webmaster Tools accounts (such as Google Search Console), web analytics and keyword ranking tools, and monitor your Organic Search performance. If there is a steep decline, contact an SEO expert immediately. Do not let a steep decline continue, because it may take months to recover once the issue is resolved.

Conclusion

The switch to HTTPS is a major technical challenge, which is not to be underestimated. Temporary fluctuations in organic search visibility are to be expected, no matter how thorough the planning and implementation.

Moreover, any hiccups in the transition phase can cause a ripple effect and further disrupt the site's visibility in search engines. While the process is ongoing, other major updates to the website should be put on hold to avoid confusing search engines further.

Not every website needs to undergo the switch to HTTPS, and Tim Berners-Lee raises a valid point in "HTTPS Everywhere is harmful". However, from a user experience and an SEO perspective, website owners can no longer ignore HTTPS. Hopefully, this guide will assist in the transition.

Wanna Know More?

I admire your interest. Check out the video and presentation slides from SMX Paris on how to do Successful SEO with HTTPS.

Courtesy of Webcertain.tv